Standford CS231n 2017 Summary

Table of contents

Course Info

Website: http://cs231n.stanford.edu/

Full syllabus link: http://cs231n.stanford.edu/syllabus.html

Assignments solutions: https://github.com/Burton2000/CS231n-2017

Number of lectures: 16

Course description:

Computer Vision has become ubiquitous in our society, with applications in search, image understanding, apps, mapping, medicine, drones, and self-driving cars. Core to many of these applications are visual recognition tasks such as image classification, localization and detection. Recent developments in neural network (aka “deep learning”) approaches have greatly advanced the performance of these state-of-the-art visual recognition systems. This course is a deep dive into details of the deep learning architectures with a focus on learning end-to-end models for these tasks, particularly image classification. During the 10-week course, students will learn to implement, train and debug their own neural networks and gain a detailed understanding of cutting-edge research in computer vision. The final assignment will involve training a multi-million parameter convolutional neural network and applying it on the largest image classification dataset (ImageNet). We will focus on teaching how to set up the problem of image recognition, the learning algorithms (e.g. backpropagation), practical engineering tricks for training and fine-tuning the networks and guide the students through hands-on assignments and a final course project. Much of the background and materials of this course will be drawn from the ImageNet Challenge.

01. Introduction to CNN for visual recognition

A brief history of Computer vision starting from the late 1960s to 2017.

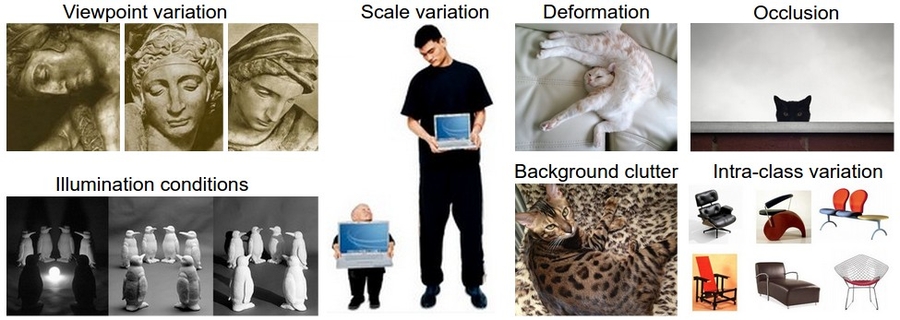

Computer vision problems includes image classification, object localization, object detection, and scene understanding.

Imagenet is one of the biggest datasets in image classification available right now.

Starting 2012 in the Imagenet competition, CNN (Convolutional neural networks) is always winning.

CNN actually has been invented in 1997 by Yann Lecun.

02. Image classification

Image classification problem has a lot of challenges like illumination and viewpoints.

An image classification algorithm can be solved with K nearest neighborhood (KNN) but it can poorly solve the problem. The properties of KNN are:

Hyperparameters of KNN are: k and the distance measure

K is the number of neighbors we are comparing to.

Distance measures include:

L2 distance (Euclidean distance)

Best for non coordinate points

L1 distance (Manhattan distance)

Best for coordinate points

Hyperparameters can be optimized using Cross-validation as following (In our case we are trying tp predict K):

Split your dataset into

ffolds.Given predicted hyperparameters:

Train your algorithm with f-1 folds and test it with the remain flood. and repeat this with every fold.

Choose the hyperparameters that gives the best training values (Average over all folds)

Linear SVM classifier is an option for solving the image classification problem, but the curse of dimensions makes it stop improving at some point.

Logistic regression is a also a solution for image classification problem, but image classification problem is non linear!

Linear classifiers has to run the following equation:

Y = wX + bshape of

wis the same asxand shape ofbis 1.

We can add 1 to X vector and remove the bias so that:

Y = wXshape of

xisoldX+1andwis the same asx

We need to know how can we get

w's andb's that makes the classifier runs at best.

03. Loss function and optimization

In the last section we talked about linear classifier but we didn't discussed how we could train the parameters of that model to get best

w's andb's.We need a loss function to measure how good or bad our current parameters.

Then we find a way to minimize the loss function given some parameters. This is called optimization.

Loss function for a linear SVM classifier:

L[i] = Sum where all classes except the predicted class (max(0, s[j] - s[y[i]] + 1))We call this the hinge loss.

Loss function means we are happy if the best prediction are the same as the true value other wise we give an error with 1 margin.

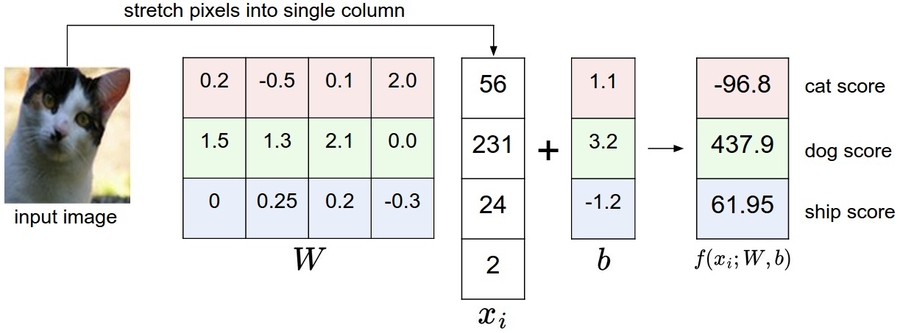

Example:

Given this example we want to compute the loss of this image.

L = max (0, 437.9 - (-96.8) + 1) + max(0, 61.95 - (-96.8) + 1) = max(0, 535.7) + max(0, 159.75) = 695.45Final loss is 695.45 which is big and reflects that the cat score needs to be the best over all classes as its the lowest value now. We need to minimize that loss.

Its OK for the margin to be 1. But its a hyperparameter too.

If your loss function gives you zero, are this value is the same value for your parameter? No there are a lot of parameters that can give you best score.

You’ll sometimes hear about people instead using the squared hinge loss SVM (or L2-SVM). that penalizes violated margins more strongly (quadratically instead of linearly). The unsquared version is more standard, but in some datasets the squared hinge loss can work better.

We add regularization for the loss function so that the discovered model don't overfit the data.

Where

Ris the regularizer, andlambdais the regularization term.

There are different regularizations techniques:

- RegularizerEquationComments

L2

R(W) = Sum(W^2)Sum all the W squared

L1

R(W) = Sum(lWl)Sum of all Ws with abs

Elastic net (L1 + L2)

R(W) = beta * Sum(W^2) + Sum(lWl)Dropout

No Equation

Regularization prefers smaller

Ws over bigWs.Regularizations is called weight decay. biases should not included in regularization.

Softmax loss (Like linear regression but works for more than 2 classes):

Softmax function:

Sum of the vector should be 1.

Softmax loss:

Log of the probability of the good class. We want it to be near 1 thats why we added a minus.

Softmax loss is called cross-entropy loss.

Consider this numerical problem when you are computing Softmax:

Optimization:

How we can optimize loss functions we discussed?

Strategy one:

Get a random parameters and try all of them on the loss and get the best loss. But its a bad idea.

Strategy two:

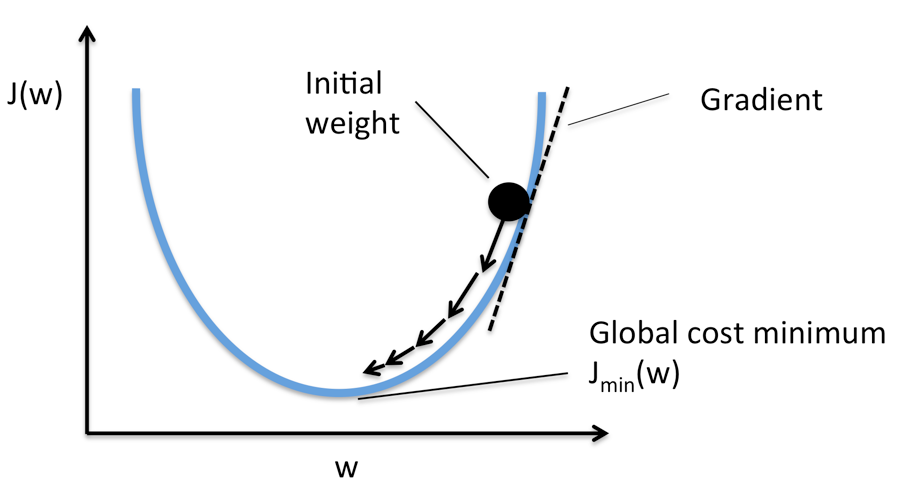

Follow the slope.

Image source.

Our goal is to compute the gradient of each parameter we have.

Numerical gradient: Approximate, slow, easy to write. (But its useful in debugging.)

Analytic gradient: Exact, Fast, Error-prone. (Always used in practice)

After we compute the gradient of our parameters, we compute the gradient descent:

learning_rate is so important hyper parameter you should get the best value of it first of all the hyperparameters.

stochastic gradient descent:

Instead of using all the date, use a mini batch of examples (32/64/128 are commonly used) for faster results.

04. Introduction to Neural network

Computing the analytic gradient for arbitrary complex functions:

What is a Computational graphs?

Used to represent any function. with nodes.

Using Computational graphs can easy lead us to use a technique that called back-propagation. Even with complex models like CNN and RNN.

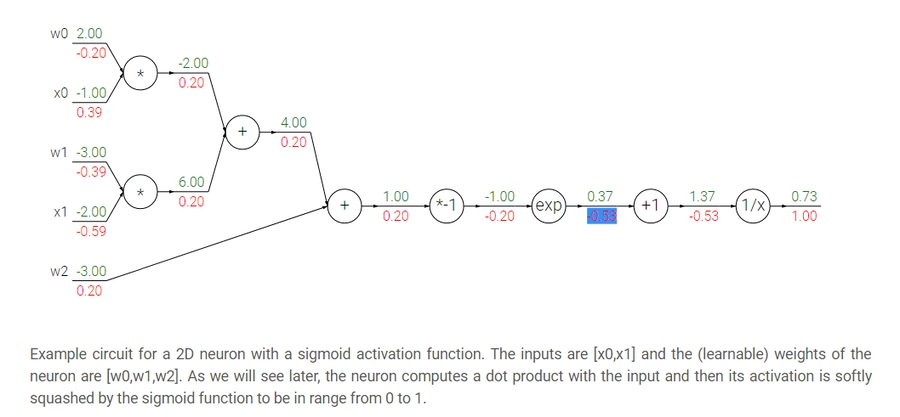

Back-propagation simple example:

Suppose we have

f(x,y,z) = (x+y)zThen graph can be represented this way:

We made an intermediate variable

qto hold the values ofx+yThen we have:

Then:

So in the Computational graphs, we call each operation

f. For eachfwe calculate the local gradient before we go on back propagation and then we compute the gradients in respect of the loss function using the chain rule.In the Computational graphs you can split each operation to as simple as you want but the nodes will be a lot. if you want the nodes to be smaller be sure that you can compute the gradient of this node.

A bigger example:

Hint: the back propagation of two nodes going to one node from the back is by adding the two derivatives.

Modularized implementation: forward/ backward API (example multiply code):

If you look at a deep learning framework you will find it follow the Modularized implementation where each class has a definition for forward and backward. For example:

Multiplication

Max

Plus

Minus

Sigmoid

Convolution

So to define neural network as a function:

(Before) Linear score function:

f = Wx(Now) 2-layer neural network:

f = W2*max(0,W1*x)Where max is the RELU non linear function

(Now) 3-layer neural network:

f = W3*max(0,W2*max(0,W1*x)And so on..

Neural networks is a stack of some simple operation that forms complex operations.

05. Convolutional neural networks (CNNs)

Neural networks history:

First perceptron machine was developed by Frank Rosenblatt in 1957. It was used to recognize letters of the alphabet. Back propagation wasn't developed yet.

Multilayer perceptron was developed in 1960 by Adaline/Madaline. Back propagation wasn't developed yet.

Back propagation was developed in 1986 by Rumeelhart.

There was a period which nothing new was happening with NN. Cause of the limited computing resources and data.

In 2006 Hinton released a paper that shows that we can train a deep neural network using Restricted Boltzmann machines to initialize the weights then back propagation.

The first strong results was in 2012 by Hinton in speech recognition. And the Alexnet "Convolutional neural networks" that wins the image net in 2012 also by Hinton's team.

After that NN is widely used in various applications.

Convolutional neural networks history:

Hubel & Wisel in 1959 to 1968 experiments on cats cortex found that there are a topographical mapping in the cortex and that the neurons has hireical organization from simple to complex.

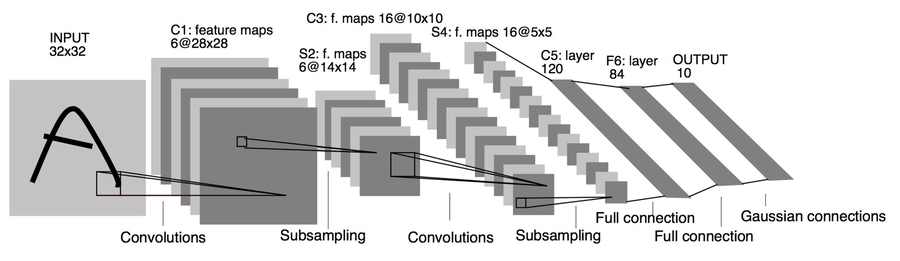

In 1998, Yann Lecun gives the paper Gradient-based learning applied to document recognition that introduced the Convolutional neural networks. It was good for recognizing zip letters but couldn't run on a more complex examples.

In 2012 AlexNet used the same Yan Lecun architecture and won the image net challenge. The difference from 1998 that now we have a large data sets that can be used also the power of the GPUs solved a lot of performance problems.

Starting from 2012 there are CNN that are used for various tasks (Here are some applications):

Image classification.

Image retrieval.

Extracting features using a NN and then do a similarity matching.

Object detection.

Segmentation.

Each pixel in an image takes a label.

Face recognition.

Pose recognition.

Medical images.

Playing Atari games with reinforcement learning.

Galaxies classification.

Street signs recognition.

Image captioning.

Deep dream.

ConvNet architectures make the explicit assumption that the inputs are images, which allows us to encode certain properties into the architecture.

There are a few distinct types of Layers in ConvNet (e.g. CONV/FC/RELU/POOL are by far the most popular)

Each Layer may or may not have parameters (e.g. CONV/FC do, RELU/POOL don’t)

Each Layer may or may not have additional hyperparameters (e.g. CONV/FC/POOL do, RELU doesn’t)

How Convolutional neural networks works?

A fully connected layer is a layer in which all the neurons is connected. Sometimes we call it a dense layer.

If input shape is

(X, M)the weighs shape for this will be(NoOfHiddenNeurons, X)

Convolution layer is a layer in which we will keep the structure of the input by a filter that goes through all the image.

We do this with dot product:

W.T*X + b. This equation uses the broadcasting technique.So we need to get the values of

WandbWe usually deal with the filter (

W) as a vector not a matrix.

We call output of the convolution activation map. We need to have multiple activation map.

Example if we have 6 filters, here are the shapes:

Input image

(32,32,3)filter size

(5,5,3)We apply 6 filters. The depth must be three because the input map has depth of three.

Output of Conv.

(28,28,6)if one filter it will be

(28,28,1)

After RELU

(28,28,6)Another filter

(5,5,6)Output of Conv.

(24,24,10)

It turns out that convNets learns in the first layers the low features and then the mid-level features and then the high level features.

After the Convnets we can have a linear classifier for a classification task.

In Convolutional neural networks usually we have some (Conv ==> Relu)s and then we apply a pool operation to downsample the size of the activation.

What is stride when we are doing convolution:

While doing a conv layer we have many choices to make regarding the stride of which we will take. I will explain this by examples.

Stride is skipping while sliding. By default its 1.

Given a matrix with shape of

(7,7)and a filter with shape(3,3):If stride is

1then the output shape will be(5,5)# 2 are droppedIf stride is

2then the output shape will be(3,3)# 4 are droppedIf stride is

3it doesn't work.

A general formula would be

((N-F)/stride +1)If stride is

1thenO = ((7-3)/1)+1 = 4 + 1 = 5If stride is

2thenO = ((7-3)/2)+1 = 2 + 1 = 3If stride is

3thenO = ((7-3)/3)+1 = 1.33 + 1 = 2.33# doesn't work

In practice its common to zero pad the border.

# Padding from both sides.Give a stride of

1its common to pad to this equation:(F-1)/2where F is the filter sizeExample

F = 3==> Zero pad with1Example

F = 5==> Zero pad with2

If we pad this way we call this same convolution.

Adding zeros gives another features to the edges thats why there are different padding techniques like padding the corners not zeros but in practice zeros works!

We do this to maintain our full size of the input. If we didn't do that the input will be shrinking too fast and we will lose a lot of data.

Example:

If we have input of shape

(32,32,3)and ten filters with shape is(5,5)with stride1and pad2Output size will be

(32,32,10)# We maintain the size.

Size of parameters per filter

= 5*5*3 + 1 = 76All parameters

= 76 * 10 = 76

Number of filters is usually common to be to the power of 2.

# To vectorize well.So here are the parameters for the Conv layer:

Number of filters K.

Usually a power of 2.

Spatial content size F.

3,5,7 ....

The stride S.

Usually 1 or 2 (If the stride is big there will be a downsampling but different of pooling)

Amount of Padding

If we want the input shape to be as the output shape, based on the F if 3 its 1, if F is 5 the 2 and so on.

Pooling makes the representation smaller and more manageable.

Pooling Operates over each activation map independently.

Example of pooling is the maxpooling.

Parameters of max pooling is the size of the filter and the stride"

Example

2x2with stride2# Usually the two parameters are the same 2 , 2

Also example of pooling is average pooling.

In this case it might be learnable.

06. Training neural networks I

As a revision here are the Mini batch stochastic gradient descent algorithm steps:

Loop:

Sample a batch of data.

Forward prop it through the graph (network) and get loss.

Backprop to calculate the gradients.

Update the parameters using the gradients.

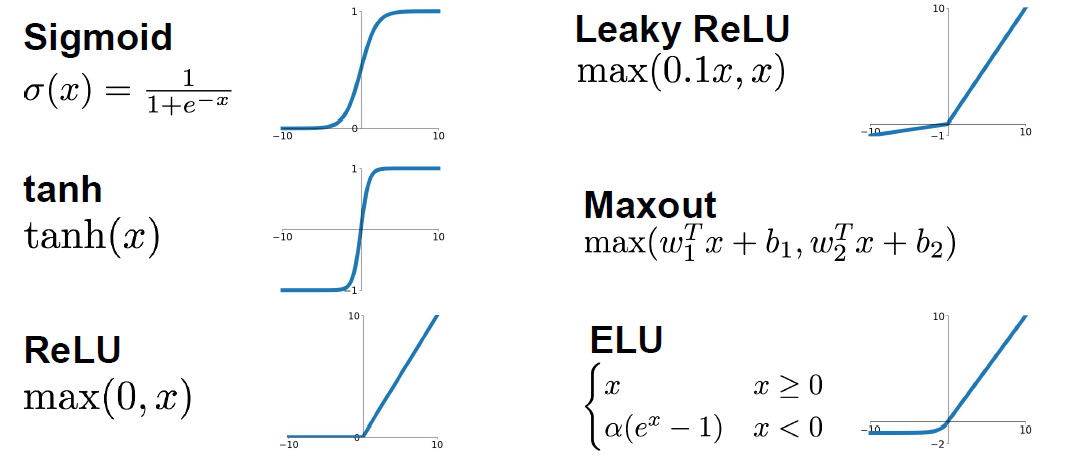

Activation functions:

Different choices for activation function includes Sigmoid, tanh, RELU, Leaky RELU, Maxout, and ELU.

Sigmoid:

Squashes the numbers between [0,1]

Used as a firing rate like human brains.

Sigmoid(x) = 1 / (1 + e^-x)Problems with sigmoid:

big values neurons kill the gradients.

Gradients are in most cases near 0 (Big values/small values), that kills the updates if the graph/network are large.

Not Zero-centered.

Didn't produce zero-mean data.

exp()is a bit compute expensive.just to mention. We have a more complex operations in deep learning like convolution.

Tanh:

Squashes the numbers between [-1,1]

Zero centered.

Still big values neurons "kill" the gradients.

Tanh(x)is the equation.Proposed by Yann Lecun in 1991.

RELU (Rectified linear unit):

RELU(x) = max(0,x)Doesn't kill the gradients.

Only small values that are killed. Killed the gradient in the half

Computationally efficient.

Converges much faster than Sigmoid and Tanh

(6x)More biologically plausible than sigmoid.

Proposed by Alex Krizhevsky in 2012 Toronto university. (AlexNet)

Problems:

Not zero centered.

If weights aren't initialized good, maybe 75% of the neurons will be dead and thats a waste computation. But its still works. This is an active area of research to optimize this.

To solve the issue mentioned above, people might initialize all the biases by 0.01

Leaky RELU:

leaky_RELU(x) = max(0.01x,x)Doesn't kill the gradients from both sides.

Computationally efficient.

Converges much faster than Sigmoid and Tanh (6x)

Will not die.

PRELU is placing the 0.01 by a variable alpha which is learned as a parameter.

Exponential linear units (ELU):

It has all the benefits of RELU

Closer to zero mean outputs and adds some robustness to noise.

problems

exp()is a bit compute expensive.

Maxout activations:

maxout(x) = max(w1.T*x + b1, w2.T*x + b2)Generalizes RELU and Leaky RELU

Doesn't die!

Problems:

oubles the number of parameters per neuron

In practice:

Use RELU. Be careful for your learning rates.

Try out Leaky RELU/Maxout/ELU

Try out tanh but don't expect much.

Don't use sigmoid!

Data preprocessing:

Normalize the data:

To normalize images:

Subtract the mean image (E.g. Alexnet)

Mean image shape is the same as the input images.

Or Subtract per-channel mean

Means calculate the mean for each channel of all images. Shape is 3 (3 channels)

Weight initialization:

What happened when initialize all Ws with zeros?

All the neurons will do exactly the same thing. They will have the same gradient and they will have the same update.

So if W's of a specific layer is equal the thing described happened

First idea is to initialize the w's with small random numbers:

The standard deviations is going to zero in deeper networks. and the gradient will vanish sooner in deep networks.

The network will explode with big numbers!

Xavier initialization:

It works because we want the variance of the input to be as the variance of the output.

But it has an issue, It breaks when you are using RELU.

He initialization (Solution for the RELU issue):

Solves the issue with RELU. Its recommended when you are using RELU

Proper initialization is an active area of research.

Batch normalization:

is a technique to provide any layer in a Neural Network with inputs that are zero mean/unit variance.

It speeds up the training. You want to do this a lot.

Made by Sergey Ioffe and Christian Szegedy at 2015.

We make a Gaussian activations in each layer. by calculating the mean and the variance.

Usually inserted after (fully connected or Convolutional layers) and (before nonlinearity).

Steps (For each output of a layer) 5. First we compute the mean and variance^2 of the batch for each feature. 6. We normalize by subtracting the mean and dividing by square root of (variance^2 + epsilon)

epsilon to not divide by zero

Then we make a scale and shift variables:

Result = gamma * normalizedX + betagamma and beta are learnable parameters.

it basically possible to say “Hey!! I don’t want zero mean/unit variance input, give me back the raw input - it’s better for me.”

Hey shift and scale by what you want not just the mean and variance!

The algorithm makes each layer flexible (It chooses which distribution it wants)

We initialize the BatchNorm Parameters to transform the input to zero mean/unit variance distributions but during training they can learn that any other distribution might be better.

During the running of the training we need to calculate the globalMean and globalVariance for each layer by using weighted average.

Benefits of Batch Normalization:

Networks train faster.

Allows higher learning rates.

helps reduce the sensitivity to the initial starting weights.

Makes more activation functions viable.

Provides some regularization.

Because we are calculating mean and variance for each batch that gives a slight regularization effect.

In conv layers, we will have one variance and one mean per activation map.

Batch normalization have worked best for CONV and regular deep NN, But for recurrent NN and reinforcement learning its still an active research area.

Its challengey in reinforcement learning because the batch is small.

Baby sitting the learning process

Preprocessing of data.

Choose the architecture.

Make a forward pass and check the loss (Disable regularization). Check if the loss is reasonable.

Add regularization, the loss should go up!

Disable the regularization again and take a small number of data and try to train the loss and reach zero loss.

You should overfit perfectly for small datasets.

Take your full training data, and small regularization then try some value of learning rate.

If loss is barely changing, then the learning rate is small.

If you got

NANthen your NN exploded and your learning rate is high.Get your learning rate range by trying the min value (That can change) and the max value that doesn't explode the network.

Do Hyperparameters optimization to get the best hyperparameters values.

Hyperparameter Optimization

Try Cross validation strategy.

Run with a few ephocs, and try to optimize the ranges.

Its best to optimize in log space.

Adjust your ranges and try again.

Its better to try random search instead of grid searches (In log space)

07. Training neural networks II

Optimization algorithms:

Problems with stochastic gradient descent:

if loss quickly in one direction and slowly in another (For only two variables), you will get very slow progress along shallow dimension, jitter along steep direction. Our NN will have a lot of parameters then the problem will be more.

Local minimum or saddle points

If SGD went into local minimum we will stuck at this point because the gradient is zero.

Also in saddle points the gradient will be zero so we will stuck.

Saddle points says that at some point:

Some gradients will get the loss up.

Some gradients will get the loss down.

And that happens more in high dimensional (100 million dimension for example)

The problem of deep NN is more about saddle points than about local minimum because deep NN has high dimensions (Parameters)

Mini batches are noisy because the gradient is not taken for the whole batch.

SGD + momentum:

Build up velocity as a running mean of gradients:

V[0]is zero.Solves the saddle point and local minimum problems.

It overshoots the problem and returns to it back.

Nestrov momentum:

Doesn't overshoot the problem but slower than SGD + momentum

AdaGrad

RMSProp

People uses this instead of AdaGrad

Adam

Calculates the momentum and RMSProp as the gradients.

It need a Fixing bias to fix starts of gradients.

Is the best technique so far runs best on a lot of problems.

With

beta1 = 0.9andbeta2 = 0.999andlearning_rate = 1e-3or5e-4is a great starting point for many models!

Learning decay

Ex. decay learning rate by half every few epochs.

To help the learning rate not to bounce out.

Learning decay is common with SGD+momentum but not common with Adam.

Dont use learning decay from the start at choosing your hyperparameters. Try first and check if you need decay or not.

All the above algorithms we have discussed is a first order optimization.

Second order optimization

Use gradient and Hessian to from quadratic approximation.

Step to the minima of the approximation.

What is nice about this update?

It doesn't has a learning rate in some of the versions.

But its unpractical for deep learning

Has O(N^2) elements.

Inverting takes O(N^3).

L-BFGS is a version of second order optimization

Works with batch optimization but not with mini-batches.

In practice first use ADAM and if it didn't work try L-BFGS.

Some says all the famous deep architectures uses SGS + Nestrov momentum

Regularization

So far we have talked about reducing the training error, but we care about most is how our model will handle unseen data!

What if the gab of the error between training data and validation data are too large?

This error is called high variance.

Model Ensembles:

Algorithm:

Train multiple independent models of the same architecture with different initializations.

At test time average their results.

It can get you extra 2% performance.

It reduces the generalization error.

You can use some snapshots of your NN at the training ensembles them and take the results.

Regularization solves the high variance problem. We have talked about L1, L2 Regularization.

Some Regularization techniques are designed for only NN and can do better.

Drop out:

In each forward pass, randomly set some of the neurons to zero. Probability of dropping is a hyperparameter that are 0.5 for almost cases.

So you will chooses some activation and makes them zero.

It works because:

It forces the network to have redundant representation; prevent co-adaption of features!

If you think about this, It ensemble some of the models in the same model!

At test time we might multiply each dropout layer by the probability of the dropout.

Sometimes at test time we don't multiply anything and leave it as it is.

With drop out it takes more time to train.

Data augmentation:

Another technique that makes Regularization.

Change the data!

For example flip the image, or rotate it.

Example in ResNet:

Training: Sample random crops and scales:

Pick random L in range [256,480]

Resize training image, short side = L

Sample random 224x244 patch.

Testing: average a fixed set of crops 4. Resize image at 5 scales: {224, 256, 384, 480, 640} 5. For each size, use 10 224x224 crops: 4 corners + center + flips

Apply Color jitter or PCA

Translation, rotation, stretching.

Drop connect

Like drop out idea it makes a regularization.

Instead of dropping the activation, we randomly zeroing the weights.

Fractional Max Pooling

Cool regularization idea. Not commonly used.

Randomize the regions in which we pool.

Stochastic depth

New idea.

Eliminate layers, instead on neurons.

Has the similar effect of drop out but its a new idea.

Transfer learning:

Some times your data is overfitted by your model because the data is small not because of regularization.

You need a lot of data if you want to train/use CNNs.

Steps of transfer learning

Train on a big dataset that has common features with your dataset. Called pretraining.

Freeze the layers except the last layer and feed your small dataset to learn only the last layer.

Not only the last layer maybe trained again, you can fine tune any number of layers you want based on the number of data you have

Guide to use transfer learning:

- Very Similar datasetvery different dataset

very little dataset

Use Linear classifier on top layer

You're in trouble.. Try linear classifier from different stages

quite a lot of data

Finetune a few layers

Finetune a large layers

Transfer learning is the normal not an exception.

08. Deep learning software

This section changes a lot every year in CS231n due to rabid changes in the deep learning softwares.

CPU vs GPU

GPU The graphics card was developed to render graphics to play games or make 3D media,. etc.

NVIDIA vs AMD

Deep learning choose NVIDIA over AMD GPU because NVIDIA is pushing research forward deep learning also makes it architecture more suitable for deep learning.

CPU has fewer cores but each core is much faster and much more capable; great at sequential tasks. While GPUs has more cores but each core is much slower "dumber"; great for parallel tasks.

GPU cores needs to work together. and has its own memory.

Matrix multiplication is from the operations that are suited for GPUs. It has MxN independent operations that can be done on parallel.

Convolution operation also can be paralyzed because it has independent operations.

Programming GPUs frameworks:

CUDA (NVIDIA only)

Write c-like code that runs directly on the GPU.

Its hard to build a good optimized code that runs on GPU. Thats why they provided high level APIs.

Higher level APIs: cuBLAS, cuDNN, etc

CuDNN has implemented back prop. , convolution, recurrent and a lot more for you!

In practice you won't write a parallel code. You will use the code implemented and optimized by others!

OpenCl

Similar to CUDA, but runs on any GPU.

Usually Slower .

Haven't much support yet from all deep learning softwares.

There are a lot of courses for learning parallel programming.

If you aren't careful, training can bottleneck on reading dara and transferring to GPU. So the solutions are:

Read all the data into RAM. # If possible

Use SSD instead of HDD

Use multiple CPU threads to prefetch data!

While the GPU are computing, a CPU thread will fetch the data for you.

A lot of frameworks implemented that for you because its a little bit painful!

Deep learning Frameworks

Its super fast moving!

Currently available frameworks:

Tensorflow (Google)

Caffe (UC Berkeley)

Caffe2 (Facebook)

Torch (NYU / Facebook)

PyTorch (Facebook)

Theano (U monteral)

Paddle (Baidu)

CNTK (Microsoft)

MXNet (Amazon)

The instructor thinks that you should focus on Tensorflow and PyTorch.

The point of deep learning frameworks:

Easily build big computational graphs.

Easily compute gradients in computational graphs.

Run it efficiently on GPU (cuDNN - cuBLAS)

Numpy doesn't run on GPU.

Most of the frameworks tries to be like NUMPY in the forward pass and then they compute the gradients for you.

Tensorflow (Google)

Code are two parts:

Define computational graph.

Run the graph and reuse it many times.

Tensorflow uses a static graph architecture.

Tensorflow variables live in the graph. while the placeholders are feed each run.

Global initializer function initializes the variables that lives in the graph.

Use predefined optimizers and losses.

You can make a full layers with layers.dense function.

Keras (High level wrapper):

Keras is a layer on top pf Tensorflow, makes common things easy to do.

So popular!

Trains a full deep NN in a few lines of codes.

There are a lot high level wrappers:

Keras

TFLearn

TensorLayer

tf.layers

#Ships with tensorflowtf-Slim

#Ships with tensorflowtf.contrib.learn

#Ships with tensorflowSonnet

# New from deep mind

Tensorflow has pretrained models that you can use while you are using transfer learning.

Tensorboard adds logging to record loss, stats. Run server and get pretty graphs!

It has distributed code if you want to split your graph on some nodes.

Tensorflow is actually inspired from Theano. It has the same inspirations and structure.

PyTorch (Facebook)

Has three layers of abstraction:

Tensor:

ndarraybut runs on GPU#Like numpy arrays in tensorflowVariable: Node in a computational graphs; stores data and gradient

#Like Tensor, Variable, Placeholders

Module: A NN layer; may store state or learnable weights

#Like tf.layers in tensorflow

In PyTorch the graphs runs in the same loop you are executing which makes it easier for debugging. This is called a dynamic graph.

In PyTorch you can define your own autograd functions by writing forward and backward for tensors. Most of the times it will implemented for you.

Torch.nn is a high level api like keras in tensorflow. You can create the models and go on and on.

You can define your own nn module!

Also Pytorch contains optimizers like tensorflow.

It contains a data loader that wraps a Dataset and provides minbatches, shuffling and multithreading.

PyTorch contains the best and super easy to use pretrained models

PyTorch contains Visdom that are like tensorboard. but Tensorboard seems to be more powerful.

PyTorch is new and still evolving compared to Torch. Its still in beta state.

PyTorch is best for research.

Tensorflow builds the graph once, then run them many times (Called static graph)

In each PyTorch iteration we build a new graph (Called dynamic graph)

Static vs dynamic graphs:

Optimization:

With static graphs, framework can optimize the graph for you before it runs.

Serialization

Static: Once graph is built, can serialize it and run it without the code that built the graph. Ex use the graph in c++

Dynamic: Always need to keep the code around.

Conditional

Is easier in dynamic graphs. And more complicated in static graphs.

Loops:

Is easier in dynamic graphs. And more complicated in static graphs.

Tensorflow fold make dynamic graphs easier in Tensorflow through dynamic batching.

Dynamic graph applications include: recurrent networks and recursive networks.

Caffe2 uses static graphs and can train model in python also works on IOS and Android

Tensorflow/Caffe2 are used a lot in production especially on mobile.

09. CNN architectures

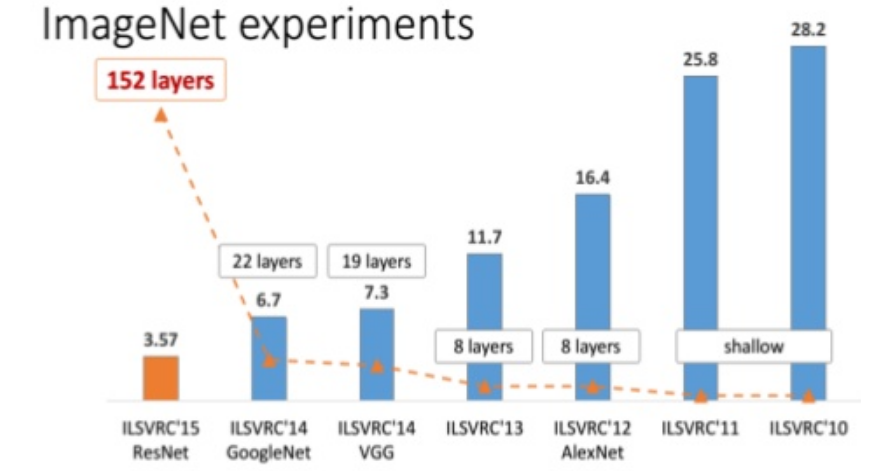

This section talks about the famous CNN architectures. Focuses on CNN architectures that won ImageNet competition since 2012.

Also we will discuss some interesting architectures as we go.

The first ConvNet that was made was LeNet-5 architectures are:by Yann Lecun at 1998.

Architecture are:

CONV-POOL-CONV-POOL-FC-FC-FCEach conv filters was

5x5applied at stride 1Each pool was

2x2applied at stride2It was useful in Digit recognition.

In particular the insight that image features are distributed across the entire image, and convolutions with learnable parameters are an effective way to extract similar features at multiple location with few parameters.

It contains exactly 5 layers

In 2010 Dan Claudiu Ciresan and Jurgen Schmidhuber published one of the very fist implementations of GPU Neural nets. This implementation had both forward and backward implemented on a a NVIDIA GTX 280 graphic processor of an up to 9 layers neural network.

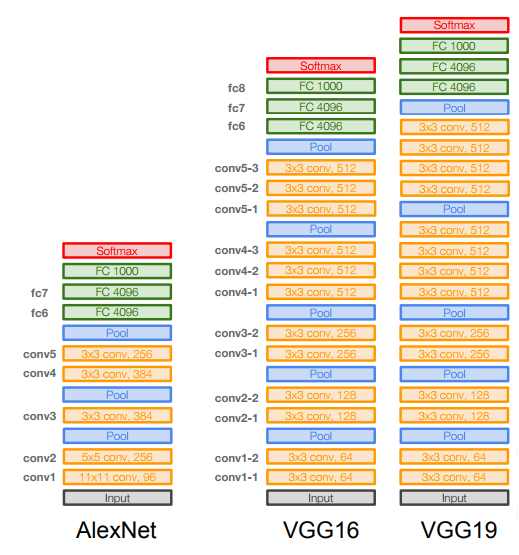

AlexNet (2012):

ConvNet that started the evolution and wins the ImageNet at 2012.

Architecture are:

CONV1-MAXPOOL1-NORM1-CONV2-MAXPOOL2-NORM2-CONV3-CONV4-CONV5-MAXPOOL3-FC6-FC7-FC8Contains exactly 8 layers the first 5 are Convolutional and the last 3 are fully connected layers.

AlexNet accuracy error was

16.4%For example if the input is 227 x 227 x3 then these are the shapes of the of the outputs at each layer:

CONV1 (96 11 x 11 filters at stride 4, pad 0)

Output shape

(55,55,96), Number of weights are(11*11*3*96)+96 = 34944

MAXPOOL1 (3 x 3 filters applied at stride 2)

Output shape

(27,27,96), No Weights

NORM1

Output shape

(27,27,96), We don't do this any more

CONV2 (256 5 x 5 filters at stride 1, pad 2)

MAXPOOL2 (3 x 3 filters at stride 2)

NORM2

CONV3 (384 3 x 3 filters ar stride 1, pad 1)

CONV4 (384 3 x 3 filters ar stride 1, pad 1)

CONV5 (256 3 x 3 filters ar stride 1, pad 1)

MAXPOOL3 (3 x 3 filters at stride 2)

Output shape

(6,6,256)

FC6 (4096)

FC7 (4096)

FC8 (1000 neurons for class score)

Some other details:

First use of RELU.

Norm layers but not used any more.

heavy data augmentation

Dropout

0.5batch size

128SGD momentum

0.9Learning rate

1e-2reduce by 10 at some iterations7 CNN ensembles!

AlexNet was trained on GTX 580 GPU with only 3 GB which wasn't enough to train in one machine so they have spread the feature maps in half. The first AlexNet was distributed!

Its still used in transfer learning in a lot of tasks.

Total number of parameters are

60 million

ZFNet (2013)

Won in 2013 with error 11.7%

It has the same general structure but they changed a little in hyperparameters to get the best output.

Also contains 8 layers.

AlexNet but:

CONV1: change from (11 x 11 stride 4) to (7 x 7 stride 2)CONV3,4,5: instead of 384, 384, 256 filters use 512, 1024, 512

OverFeat (2013)

Won the localization in imageNet in 2013

We show how a multiscale and sliding window approach can be efficiently implemented within a ConvNet. We also introduce a novel deep learning approach to localization by learning to predict object boundaries.

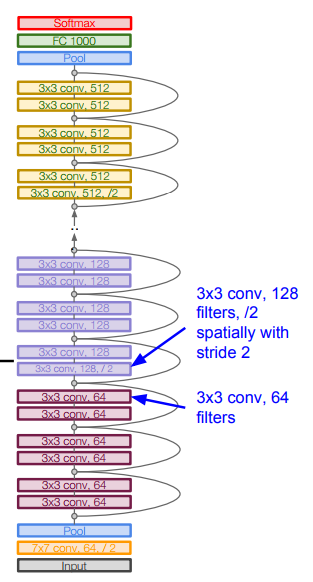

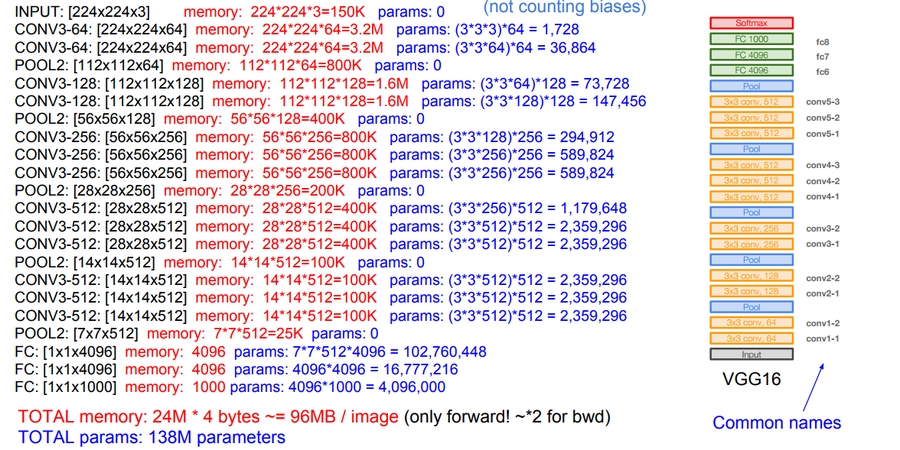

VGGNet (2014) (Oxford)

Deeper network with more layers.

Contains 19 layers.

Won on 2014 with GoogleNet with error 7.3%

Smaller filters with deeper layers.

The great advantage of VGG was the insight that multiple 3 × 3 convolution in sequence can emulate the effect of larger receptive fields, for examples 5 × 5 and 7 × 7.

Used the simple 3 x 3 Conv all through the network.

3 (3 x 3) filters has the same effect as 7 x 7

The Architecture contains several CONV layers then POOL layer over 5 times and then the full connected layers.

It has a total memory of 96MB per image for only forward propagation!

Most memory are in the earlier layers

Total number of parameters are 138 million

Most of the parameters are in the fully connected layers

Has a similar details in training like AlexNet. Like using momentum and dropout.

VGG19 are an upgrade for VGG16 that are slightly better but with more memory

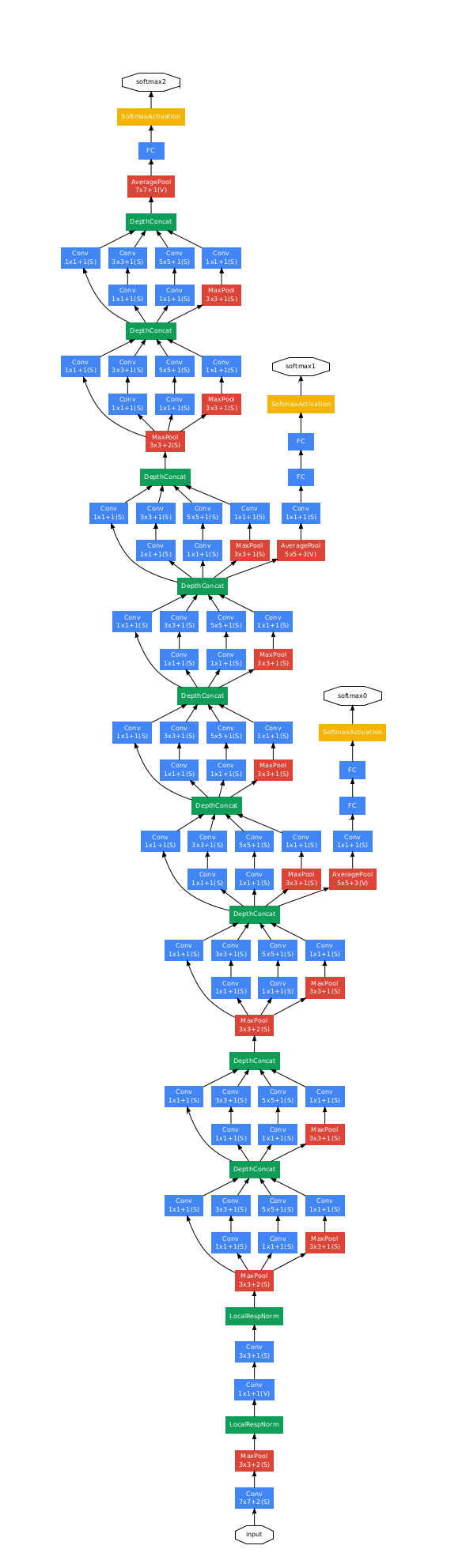

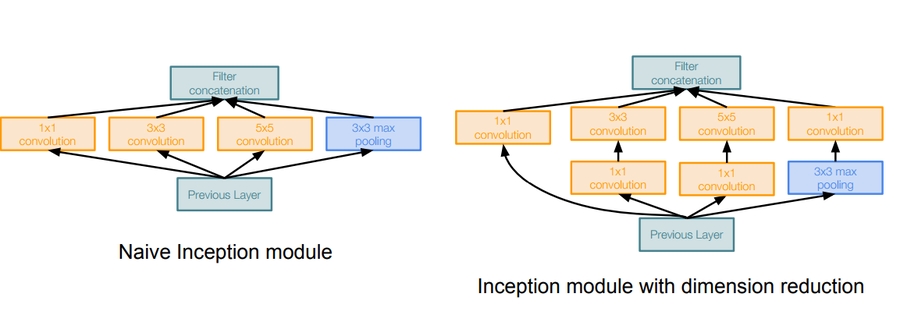

GoogleNet (2014)

Deeper network with more layers.

Contains 22 layers.

It has Efficient Inception module.

Only 5 million parameters! 12x less than AlexNet

Won on 2014 with VGGNet with error 6.7%

Inception module:

Design a good local network topology (network within a network (NiN)) and then stack these modules on top of each other.

It consists of:

Apply parallel filter operations on the input from previous layer

Multiple convs of sizes (1 x 1, 3 x 3, 5 x 5)

Adds padding to maintain the sizes.

Pooling operation. (Max Pooling)

Adds padding to maintain the sizes.

Concatenate all filter outputs together depth-wise.

For example:

Input for inception module is 28 x 28 x 256

Then the parallel filters applied:

(1 x 1), 128 filter

# output shape (28,28,128)(3 x 3), 192 filter

# output shape (28,28,192)(5 x 5), 96 filter

# output shape (28,28,96)(3 x 3) Max pooling

# output shape (28,28,256)

After concatenation this will be

(28,28,672)

By this design -We call Naiveit has a big computation complexity.

The last example will make:

[1 x 1 conv, 128] ==> 28 * 28 * 128 * 1 * 1 * 256 = 25 Million approx

[3 x 3 conv, 192] ==> 28 * 28 * 192 *3 *3 * 256 = 346 Million approx

[5 x 5 conv, 96] ==> 28 * 28 * 96 * 5 * 5 * 256 = 482 Million approx

In total around 854 Million operation!

Solution: bottleneck layers that use 1x1 convolutions to reduce feature depth.

Inspired from NiN (Network in network)

The bottleneck solution will make a total operations of 358M on this example which is good compared with the naive implementation.

So GoogleNet stacks this Inception module multiple times to get a full architecture of a network that can solve a problem without the Fully connected layers.

Just to mention, it uses an average pooling layer at the end before the classification step.

Full architecture:

In February 2015 Batch-normalized Inception was introduced as Inception V2. Batch-normalization computes the mean and standard-deviation of all feature maps at the output of a layer, and normalizes their responses with these values.

In December 2015 they introduced a paper "Rethinking the Inception Architecture for Computer Vision" which explains the older inception models well also introducing a new version V3.

The first GoogleNet and VGG was before batch normalization invented so they had some hacks to train the NN and converge well.

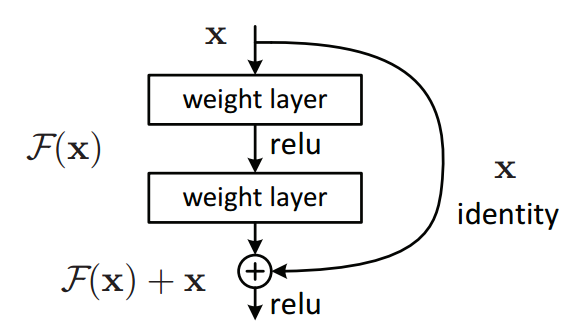

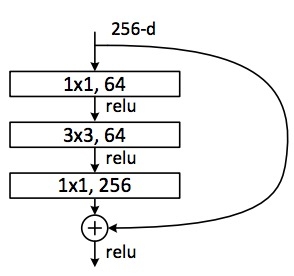

ResNet (2015) (Microsoft Research)

152-layer model for ImageNet. Winner by 3.57% which is more than human level error.

This is also the very first time that a network of > hundred, even 1000 layers was trained.

Swept all classification and detection competitions in ILSVRC’15 and COCO’15!

What happens when we continue stacking deeper layers on a “plain” Convolutional neural network?

The deeper model performs worse, but it’s not caused by overfitting!

The learning stops performs well somehow because deeper NN are harder to optimize!

The deeper model should be able to perform at least as well as the shallower model.

A solution by construction is copying the learned layers from the shallower model and setting additional layers to identity mapping.

Residual block:

Microsoft came with the Residual block which has this architecture:

Say you have a network till a depth of N layers. You only want to add a new layer if you get something extra out of adding that layer.

One way to ensure this new (N+1)th layer learns something new about your network is to also provide the input(x) without any transformation to the output of the (N+1)th layer. This essentially drives the new layer to learn something different from what the input has already encoded.

The other advantage is such connections help in handling the Vanishing gradient problem in very deep networks.

With the Residual block we can now have a deep NN of any depth without the fearing that we can't optimize the network.

ResNet with a large number of layers started to use a bottleneck layer similar to the Inception bottleneck to reduce the dimensions.

Full ResNet architecture:

Stack residual blocks.

Every residual block has two 3 x 3 conv layers.

Additional conv layer at the beginning.

No FC layers at the end (only FC 1000 to output classes)

Periodically, double number of filters and downsample spatially using stride 2 (/2 in each dimension)

Training ResNet in practice:

Batch Normalization after every CONV layer.

Xavier/2 initialization from He et al.

SGD + Momentum (

0.9)Learning rate: 0.1, divided by 10 when validation error plateaus

Mini-batch size

256Weight decay of

1e-5No dropout used.

Inception-v4: Resnet + Inception and was founded in 2016.

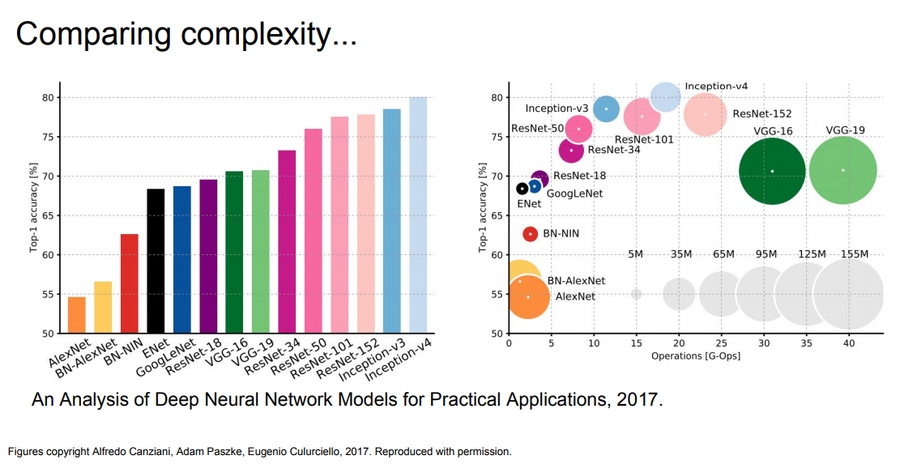

The complexity comparing over all the architectures:

VGG: Highest memory, most operations.

GoogLeNet: most efficient.

ResNets Improvements:

(2016) Identity Mappings in Deep Residual Networks

From the creators of ResNet.

Gives better performance.

(2016) Wide Residual Networks

Argues that residuals are the important factor, not depth

50-layer wide ResNet outperforms 152-layer original ResNet

Increasing width instead of depth more computationally efficient (parallelizable)

(2016) Deep Networks with Stochastic Depth

Motivation: reduce vanishing gradients and training time through short networks during training.

Randomly drop a subset of layers during each training pass

Use full deep network at test time.

Beyond ResNets:

(2017) FractalNet: Ultra-Deep Neural Networks without Residuals

Argues that key is transitioning effectively from shallow to deep and residual representations are not necessary.

Trained with dropping out sub-paths

Full network at test time.

(2017) Densely Connected Convolutional Networks

(2017) SqueezeNet: AlexNet-level Accuracy With 50x Fewer Parameters and <0.5Mb Model Size

Good for production.

It is a re-hash of many concepts from ResNet and Inception, and show that after all, a better design of architecture will deliver small network sizes and parameters without needing complex compression algorithms.

Conclusion:

ResNet current best default.

Trend towards extremely deep networks

In the last couple of years, some models all using the shortcuts like "ResNet" to eaisly flow the gradients.

10. Recurrent Neural networks

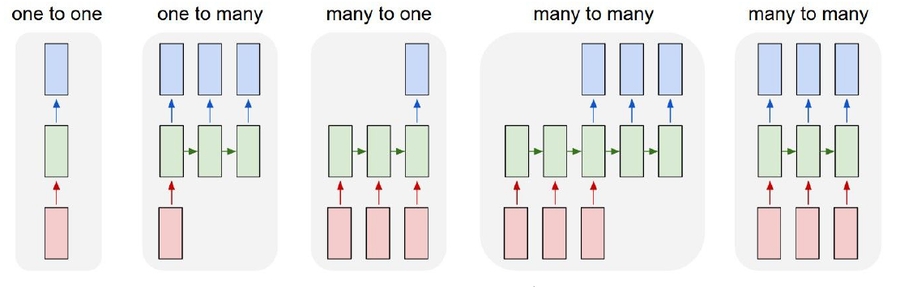

Vanilla Neural Networks "Feed neural networks", input of fixed size goes through some hidden units and then go to output. We call it a one to one network.

Recurrent Neural Networks RNN Models:

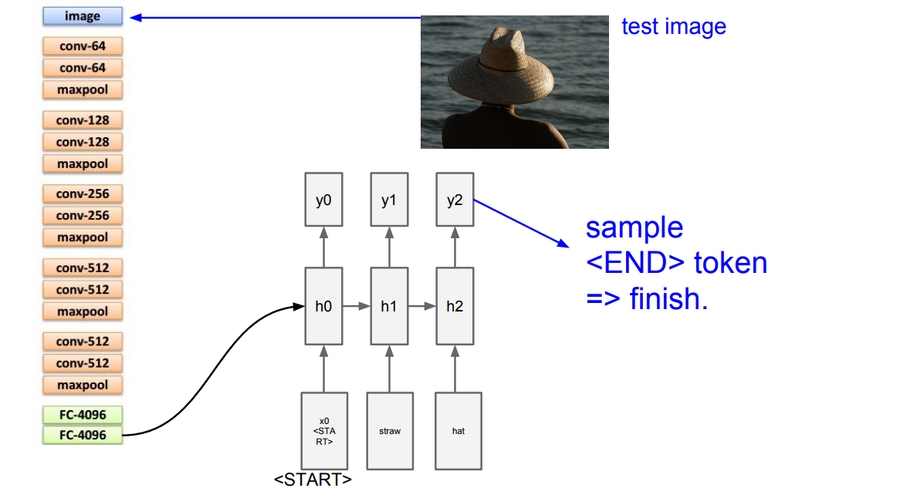

One to many

Example: Image Captioning

image ==> sequence of words

Many to One

Example: Sentiment Classification

sequence of words ==> sentiment

Many to many

Example: Machine Translation

seq of words in one language ==> seq of words in another language

Example: Video classification on frame level

RNNs can also work for Non-Sequence Data (One to One problems)

It worked in Digit classification through taking a series of “glimpses”

“Multiple Object Recognition with Visual Attention”, ICLR 2015.

It worked on generating images one piece at a time

i.e generating a captcha

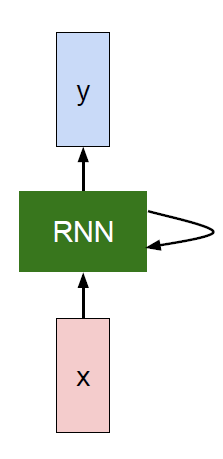

So what is a recurrent neural network?

Recurrent core cell that take an input x and that cell has an internal state that are updated each time it reads an input.

The RNN block should return a vector.

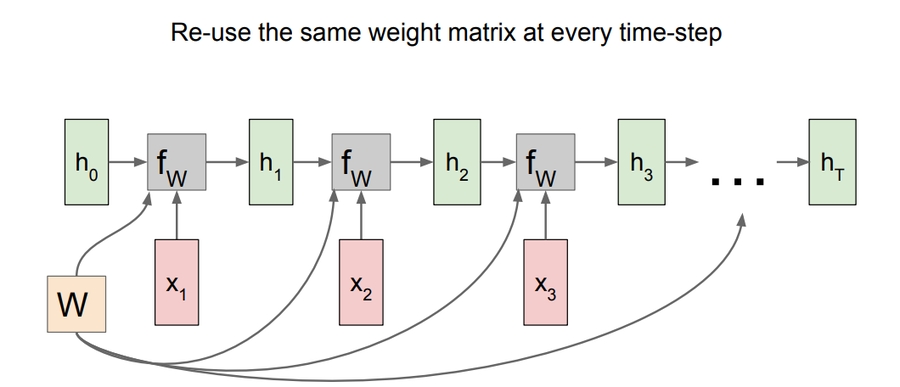

We can process a sequence of vectors x by applying a recurrence formula at every time step:

The same function and the same set of parameters are used at every time step.

(Vanilla) Recurrent Neural Network:

This is the simplest example of a RNN.

RNN works on a sequence of related data.

Recurrent NN Computational graph:

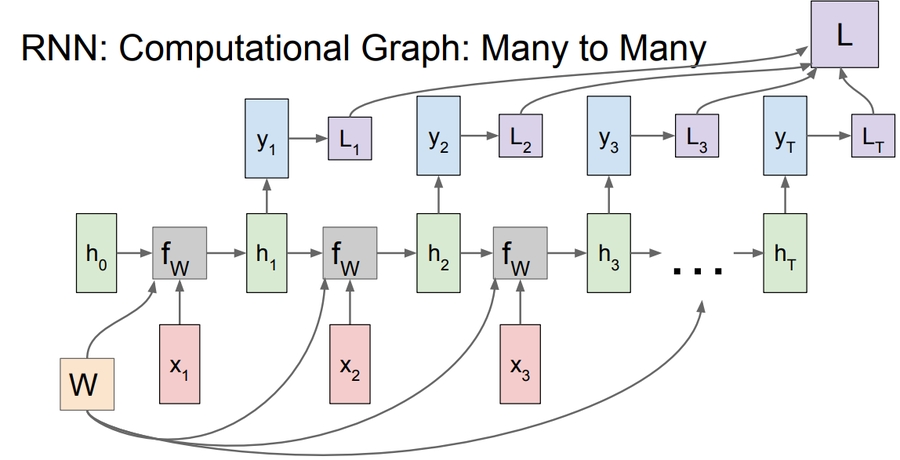

h0are initialized to zero.Gradient of

Wis the sum of all theWgradients that has been calculated!A many to many graph:

Also the last is the sum of all losses and the weights of Y is one and is updated through summing all the gradients!

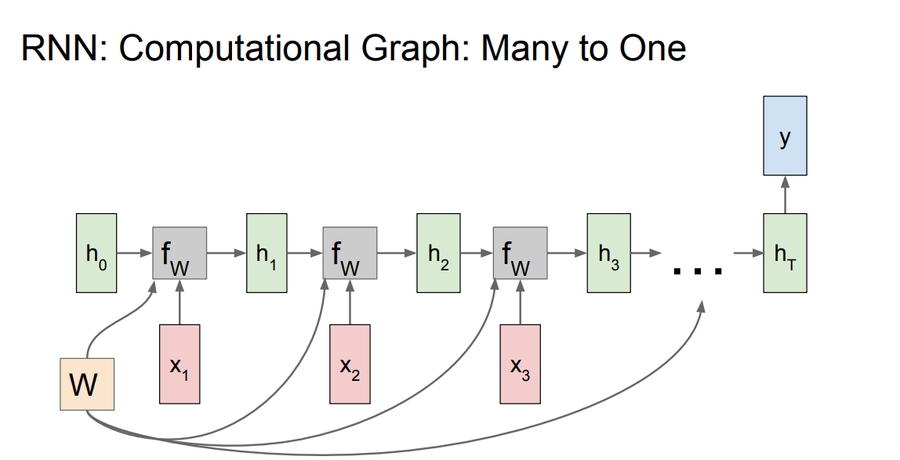

A many to one graph:

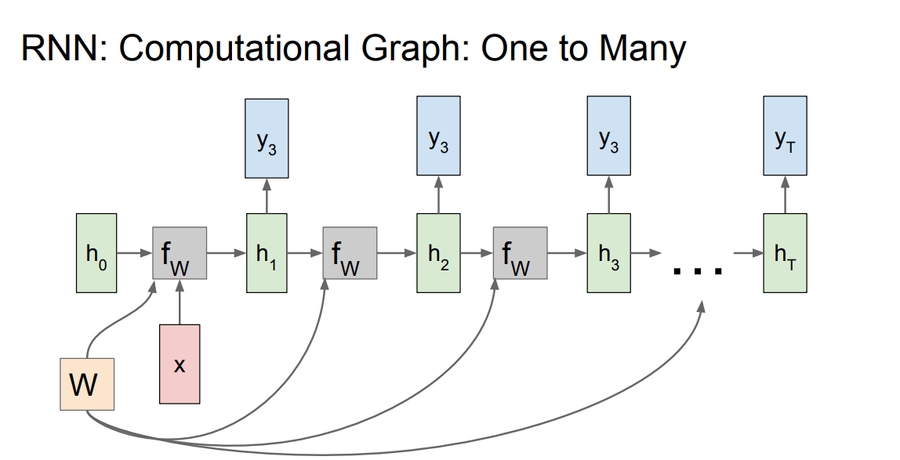

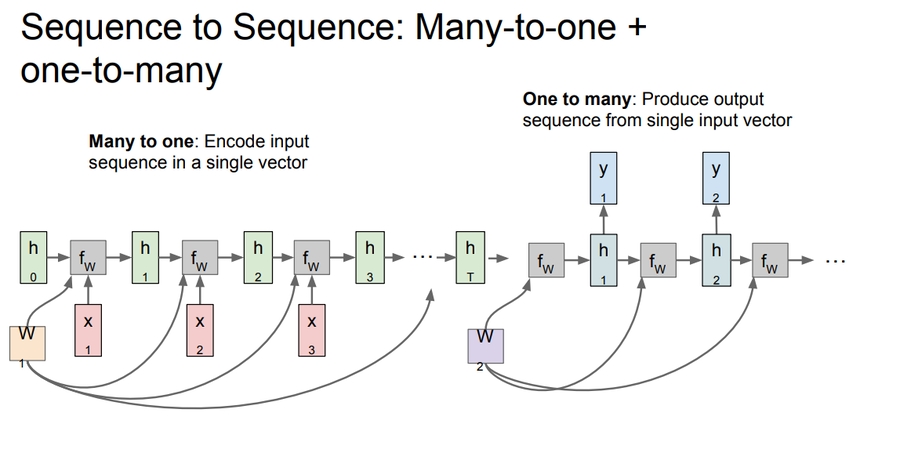

A one to many graph:

sequence to sequence graph:

Encoder and decoder philosophy.

Examples:

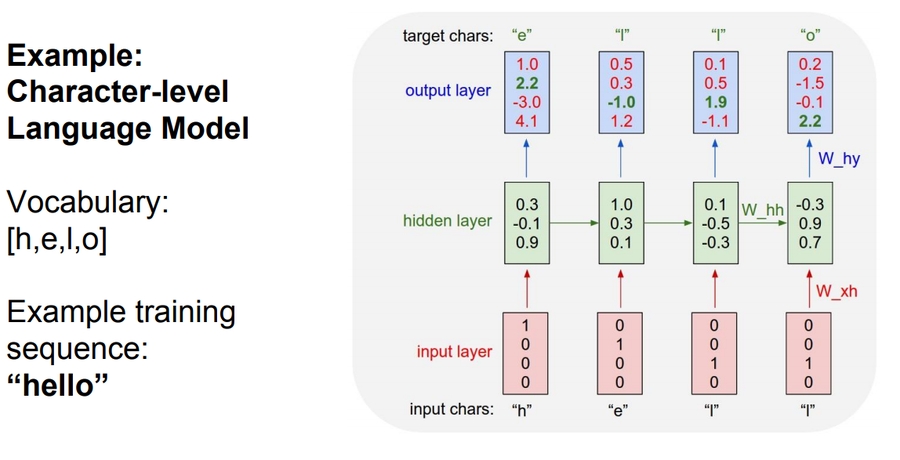

Suppose we are building words using characters. We want a model to predict the next character of a sequence. Lets say that the characters are only

[h, e, l, o]and the words are [hello]Training:

Only the third prediction here is true. The loss needs to be optimized.

We can train the network by feeding the whole word(s).

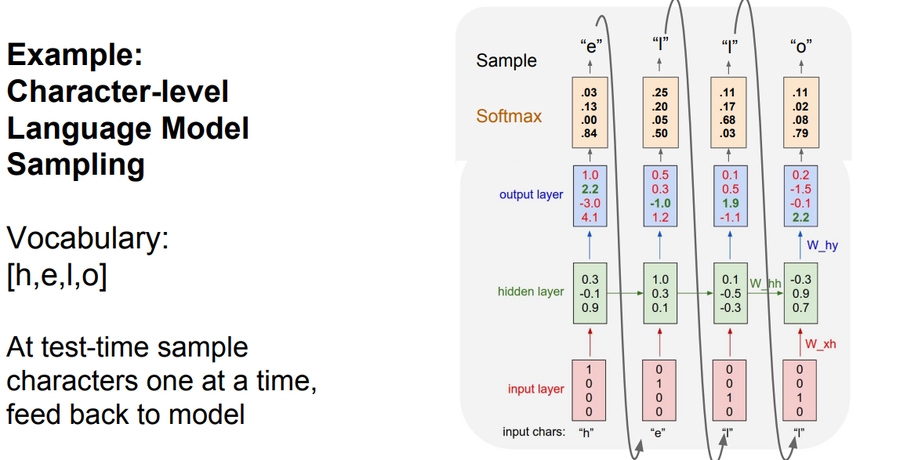

Testing time:

At test time we work with a character by character. The output character will be the next input with the other saved hidden activations.

This link contains all the code but uses Truncated Backpropagation through time as we will discuss.

Backpropagation through time Forward through entire sequence to compute loss, then backward through entire sequence to compute gradient.

But if we choose the whole sequence it will be so slow and take so much memory and will never converge!

So in practice people are doing "Truncated Backpropagation through time" as we go on we Run forward and backward through chunks of the sequence instead of whole sequence

Then Carry hidden states forward in time forever, but only backpropagate for some smaller number of steps.

Example on image captioning:

They use token to finish running.

The biggest dataset for image captioning is Microsoft COCO.

Image Captioning with Attention is a project in which when the RNN is generating captions, it looks at a specific part of the image not the whole image.

Image Captioning with Attention technique is also used in "Visual Question Answering" problem

Multilayer RNNs is generally using some layers as the hidden layer that are feed into again. LSTM is a multilayer RNNs.

Backward flow of gradients in RNN can explode or vanish. Exploding is controlled with gradient clipping. Vanishing is controlled with additive interactions (LSTM)

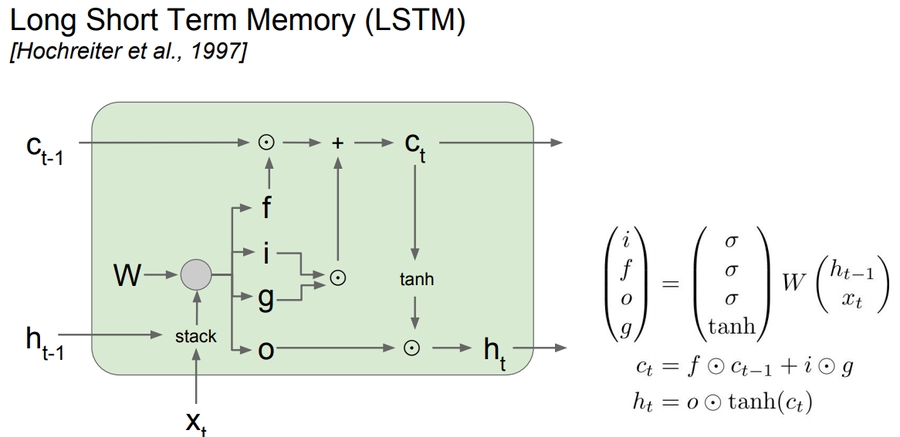

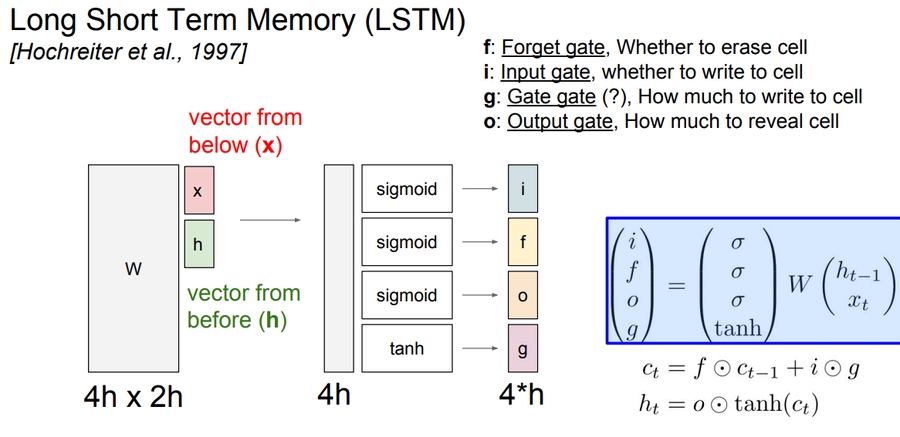

LSTM stands for Long Short Term Memory. It was designed to help the vanishing gradient problem on RNNs.

It consists of:

f: Forget gate, Whether to erase cell

i: Input gate, whether to write to cell

g: Gate gate (?), How much to write to cell

o: Output gate, How much to reveal cell

The LSTM gradients are easily computed like ResNet

The LSTM is keeping data on the long or short memory as it trains means it can remember not just the things from last layer but layers.

Highway networks is something between ResNet and LSTM that is still in research.

Better/simpler architectures are a hot topic of current research

Better understanding (both theoretical and empirical) is needed.

RNN is used for problems that uses sequences of related inputs more. Like NLP and Speech recognition.

11. Detection and Segmentation

So far we are talking about image classification problem. In this section we will talk about Segmentation, Localization, Detection.

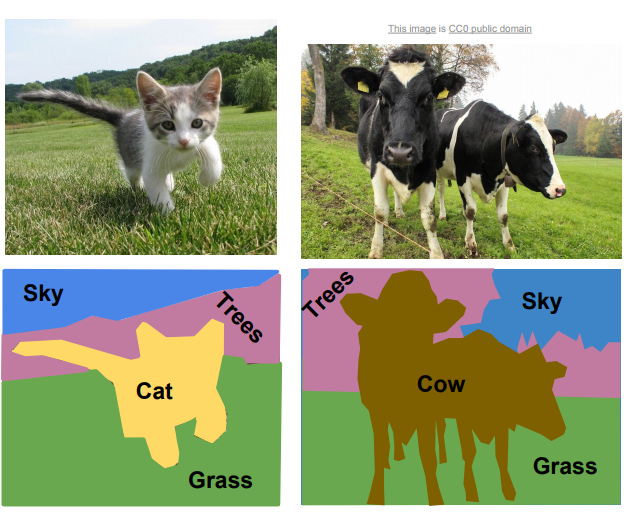

Semantic Segmentation

We want to Label each pixel in the image with a category label.

As you see the cows in the image, Semantic Segmentation Don’t differentiate instances, only care about pixels.

The first idea is to use a sliding window. We take a small window size and slide it all over the picture. For each window we want to label the center pixel.

It will work but its not a good idea because it will be computational expensive!

Very inefficient! Not reusing shared features between overlapping patches.

In practice nobody uses this.

The second idea is designing a network as a bunch of Convolutional layers to make predictions for pixels all at once!

Input is the whole image. Output is the image with each pixel labeled.

We need a lot of labeled data. And its very expensive data.

It needs a deep Conv. layers.

The loss is cross entropy between each pixel provided.

Data augmentation are good here.

The problem with this implementation that convolutions at original image resolution will be very expensive.

So in practice we don't see something like this right now.

The third idea is based on the last idea. The difference is that we are downsampling and upsampling inside the network.

We downsample because using the whole image as it is very expensive. So we go on multiple layers downsampling and then upsampling in the end.

Downsampling is an operation like Pooling and strided convolution.

Upsampling is like "Nearest Neighbor" or "Bed of Nails" or "Max unpooling"

Nearest Neighbor example:

Classification + Localization:

In this problem we want to classify the main object in the image and its location as a rectangle.

We assume there are one object.

We will create a multi task NN. The architecture are as following:

Convolution network layers connected to:

FC layers that classify the object.

# The plain classification problem we knowFC layers that connects to a four numbers

(x,y,w,h)We treat Localization as a regression problem.

This problem will have two losses:

Softmax loss for classification

Regression (Linear loss) for the localization (L2 loss)

Loss = SoftmaxLoss + L2 loss

Often the first Conv layers are pretrained NNs like AlexNet!

This technique can be used in so many other problems like: Human Pose Estimation.

Object Detection

A core idea of computer vision. We will talk by details in this problem.

The difference between "Classification + Localization" and this problem is that here we want to detect one or mode different objects and its locations!

First idea is to use a sliding window

Worked well and long time.

The steps are:

Apply a CNN to many different crops of the image, CNN classifies each crop as object or background.

The problem is we need to apply CNN to huge number of locations and scales, very computationally expensive!

The brute force sliding window will make us take thousands of thousands of time.

Region Proposals will help us deciding which region we should run our NN at:

Find blobby image regions that are likely to contain objects.

Relatively fast to run; e.g. Selective Search gives 1000 region proposals in a few seconds on CPU

So now we can apply one of the Region proposals networks and then apply the first idea.

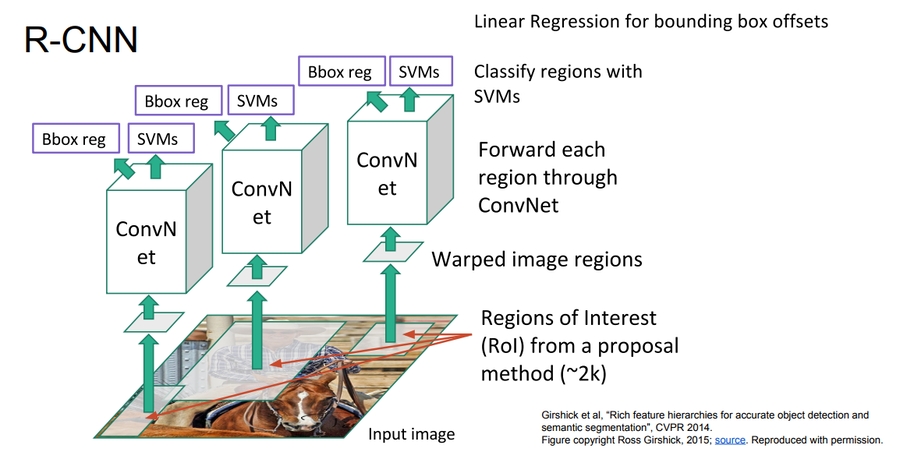

There is another idea which is called R-CNN

The idea is bad because its taking parts of the image -With Region Proposalsif different sizes and feed it to CNN after scaling them all to one size. Scaling is bad

Also its very slow.

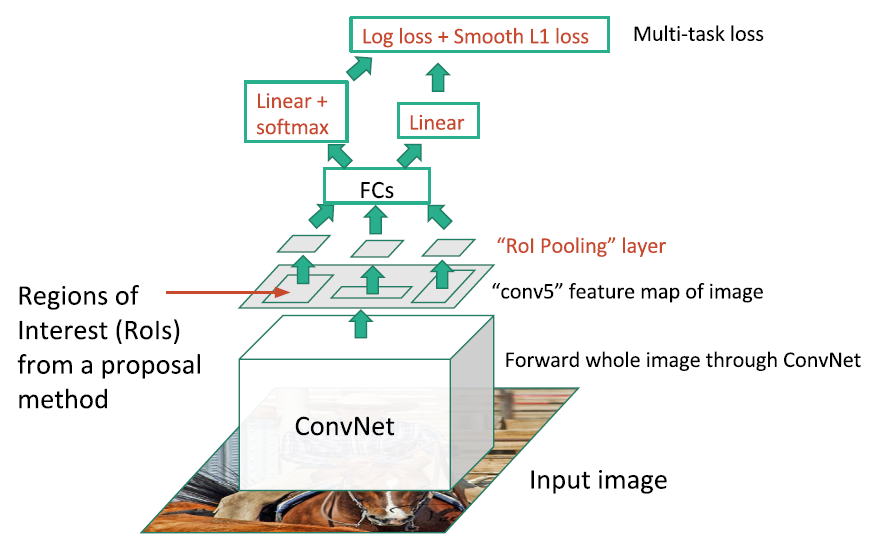

Fast R-CNN is another idea that developed on R-CNN

It uses one CNN to do everything.

Faster R-CNN does its own region proposals by Inserting Region Proposal Network (RPN) to predict proposals from features.

The fastest of the R-CNNs.

Another idea is Detection without Proposals: YOLO / SSD

YOLO stands for you only look once.

YOLO/SDD is two separate algorithms.

Faster but not as accurate.

Takeaways

Faster R-CNN is slower but more accurate.

SSD/YOLO is much faster but not as accurate.

Denese Captioning

Denese Captioning is "Object Detection + Captioning"

Paper that covers this idea can be found here.

Instance Segmentation

This is like the full problem.

Rather than we want to predict the bounding box, we want to know which pixel label but also distinguish them.

There are a lot of ideas.

There are a new idea "Mask R-CNN"

Like R-CNN but inside it we apply the Semantic Segmentation

There are a lot of good results out of this paper.

It sums all the things that we have discussed in this lecture.

Performance of this seems good.

12. Visualizing and Understanding

We want to know what’s going on inside ConvNets?

People want to trust the black box (CNN) and know how it exactly works and give and good decisions.

A first approach is to visualize filters of the first layer.

Maybe the shape of the first layer filter is 5 x 5 x 3, and the number of filters are 16. Then we will have 16 different "colored" filter images.

It turns out that these filters learns primitive shapes and oriented edges like the human brain does.

These filters really looks the same on each Conv net you will train, Ex if you tried to get it out of AlexNet, VGG, GoogleNet, or ResNet.

This will tell you what is the first convolution layer is looking for in the image.

We can visualize filters from the next layers but they won't tell us anything.

Maybe the shape of the first layer filter is 5 x 5 x 20, and the number of filters are 16. Then we will have 16*20 different "gray" filter images.

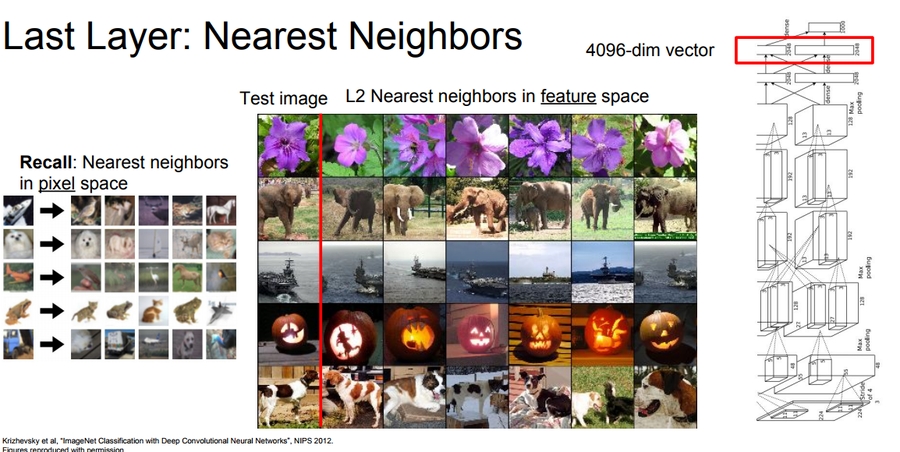

In AlexNet, there was some FC layers in the end. If we took the 4096-dimensional feature vector for an image, and collecting these feature vectors.

If we made a nearest neighbors between these feature vectors and get the real images of these features we will get something very good compared with running the KNN on the images directly!

This similarity tells us that these CNNs are really getting the semantic meaning of these images instead of on the pixels level!

We can make a dimensionality reduction on the 4096 dimensional feature and compress it to 2 dimensions.

This can be made by PCA, or t-SNE.

t-SNE are used more with deep learning to visualize the data. Example can be found here.

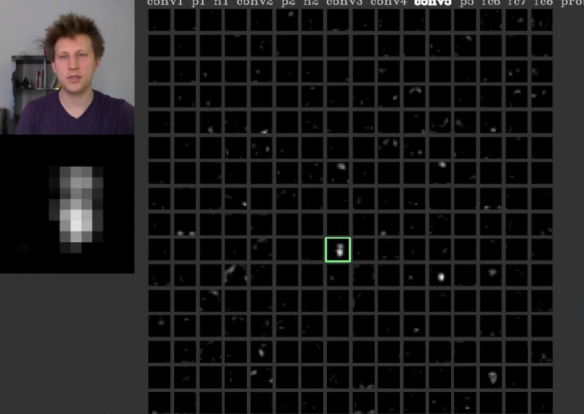

We can Visualize the activation maps.

For example if CONV5 feature map is 128 x 13 x 13, We can visualize it as 128 13 x 13 gray-scale images.

One of these features are activated corresponding to the input, so now we know that this particular map are looking for something.

Its done by Yosinski et. More info are here.

There are something called Maximally Activating Patches that can help us visualize the intermediate features in Convnets

The steps of doing this is as following:

We choose a layer then a neuron

Ex. We choose Conv5 in AlexNet which is 128 x 13 x 13 then pick channel (Neuron) 17/128

Run many images through the network, record values of chosen channel.

Visualize image patches that correspond to maximal activations.

We will find that each neuron is looking into a specific part of the image.

Extracted images are extracted using receptive field.

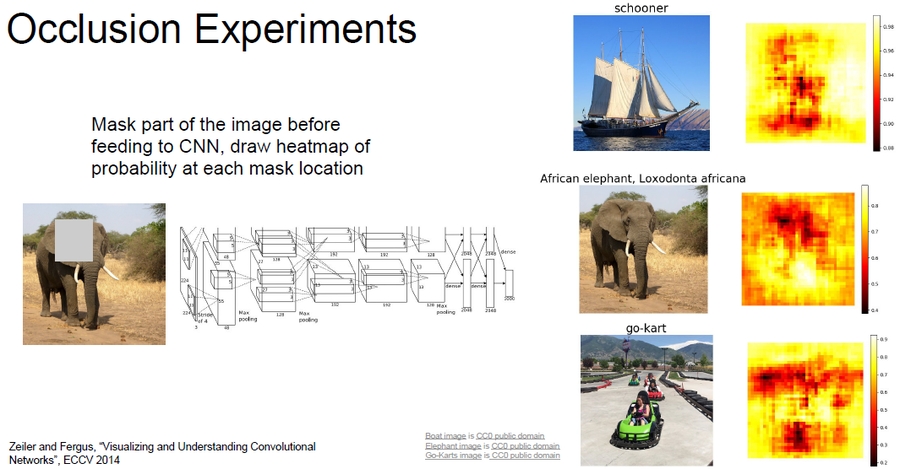

Another idea is Occlusion Experiments

We mask part of the image before feeding to CNN, draw heat-map of probability (Output is true) at each mask location

It will give you the most important parts of the image in which the Conv. Network has learned from.

Saliency Maps tells which pixels matter for classification

Like Occlusion Experiments but with a completely different approach

We Compute gradient of (unnormalized) class score with respect to image pixels, take absolute value and max over RGB channels. It will get us a gray image that represents the most important areas in the image.

This can be used for Semantic Segmentation sometimes.

(guided) backprop Makes something like Maximally Activating Patches but unlike it gets the pixels in which we are caring of.

In this technique choose a channel like Maximally Activating Patches and then compute gradient of neuron value with respect to image pixels

Images come out nicer if you only backprop positive gradients through each RELU (guided backprop)

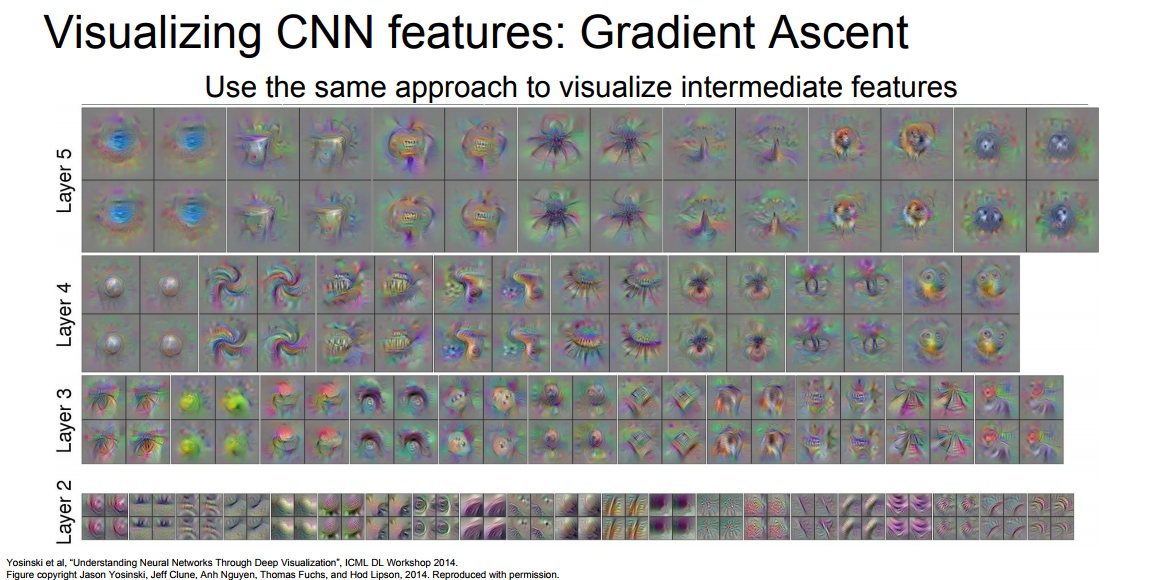

Gradient Ascent

Generate a synthetic image that maximally activates a neuron.

Reverse of gradient decent. Instead of taking the minimum it takes the maximum.

We want to maximize the neuron with the input image. So here instead we are trying to learn the image that maximize the activation:

Steps of gradient ascent

Initialize image to zeros.

Forward image to compute current scores.

Backprop to get gradient of neuron value with respect to image pixels.

Make a small update to the image

R(I)may equal to L2 of generated image.To get a better results we use a better regularizer:

penalize L2 norm of image; also during optimization periodically:

Gaussian blur image

Clip pixels with small values to 0

Clip pixels with small gradients to 0

A better regularizer makes out images cleaner!

The results in the latter layers seems to mean something more than the other layers.

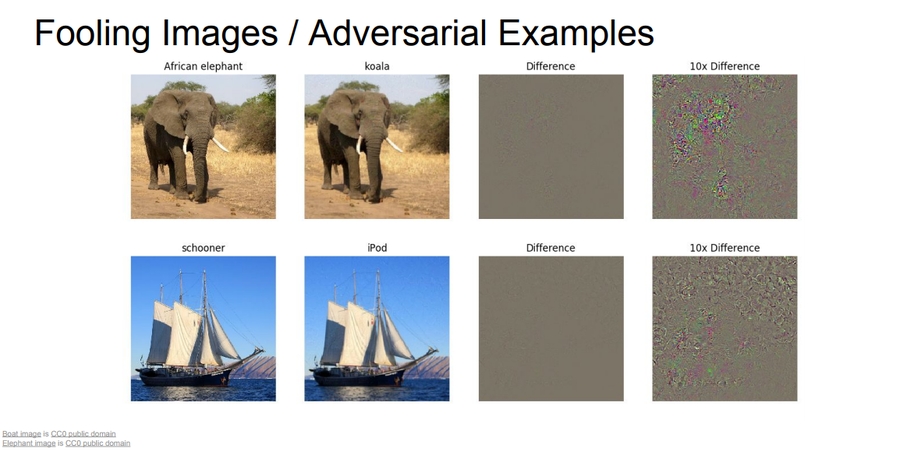

We can fool CNN by using this procedure:

Start from an arbitrary image.

# Random picture based on nothing.Pick an arbitrary class.

# Random classModify the image to maximize the class.

Repeat until network is fooled.

Results on fooling the network is pretty surprising!

For human eyes they are the same, but it fooled the network by adding just some noise!

DeepDream: Amplify existing features

Google released deep dream on their website.

What its actually doing is the same procedure as fooling the NN that we discussed, but rather than synthesizing an image to maximize a specific neuron, instead try to amplify the neuron activations at some layer in the network.

Steps:

Forward: compute activations at chosen layer.

# form an input image (Any image)Set gradient of chosen layer equal to its activation.

Equivalent to

I* = arg max[I] sum(f(I)^2)

Backward: Compute gradient on image.

Update image.

The code of deep dream is online you can download and check it yourself.

Feature Inversion

Gives us to know what types of elements parts of the image are captured at different layers in the network.

Given a CNN feature vector for an image, find a new image that:

Matches the given feature vector.

looks natural (image prior regularization)

Texture Synthesis

Old problem in computer graphics.

Given a sample patch of some texture, can we generate a bigger image of the same texture?

There is an algorithm which doesn't depend on NN:

Wei and Levoy, Fast Texture Synthesis using Tree-structured Vector Quantization, SIGGRAPH 2000

Its a really simple algorithm

The idea here is that this is an old problem and there are a lot of algorithms that has already solved it but simple algorithms doesn't work well on complex textures!

An idea of using NN has been proposed on 2015 based on gradient ascent and called it "Neural Texture Synthesis"

It depends on something called Gram matrix.

Neural Style Transfer = Feature + Gram Reconstruction

Gatys, Ecker, and Bethge, Image style transfer using Convolutional neural networks, CVPR 2016

Implementation by pytorch here.

Style transfer requires many forward / backward passes through VGG; very slow!

Train another neural network to perform style transfer for us!

Fast Style Transfer is the solution.

Johnson, Alahi, and Fei-Fei, Perceptual Losses for Real-Time Style Transfer and Super-Resolution, ECCV 2016

There are a lot of work on these style transfer and it continues till now!

Summary:

Activations: Nearest neighbors, Dimensionality reduction, maximal patches, occlusion

Gradients: Saliency maps, class visualization, fooling images, feature inversion

Fun: DeepDream, Style Transfer

13. Generative models

Generative models are type of Unsupervised learning.

Supervised vs Unsupervised Learning:

- Supervised LearningUnsupervised Learning

Data structure

Data: (x, y), and x is data, y is label

Data: x, Just data, no labels!

Data price

Training data is expensive in a lot of cases.

Training data are cheap!

Goal

Learn a function to map x -> y

Learn some underlying hidden structure of the data

Examples

Classification, regression, object detection, semantic segmentation, image captioning

Clustering, dimensionality reduction, feature learning, density estimation

Autoencoders are a Feature learning technique.

It contains an encoder and a decoder. The encoder downsamples the image while the decoder upsamples the features.

The loss are L2 loss.

Density estimation is where we want to learn/estimate the underlaying distribution for the data!

There are a lot of research open problems in unsupervised learning compared with supervised learning!

Generative Models

Given training data, generate new samples from same distribution.

Addresses density estimation, a core problem in unsupervised learning.

We have different ways to do this:

Explicit density estimation: explicitly define and solve for the learning model.

Learn model that can sample from the learning model without explicitly defining it.

Why Generative Models?

Realistic samples for artwork, super-resolution, colorization, etc

Generative models of time-series data can be used for simulation and planning (reinforcement learning applications!)

Training generative models can also enable inference of latent representations that can be useful as general features

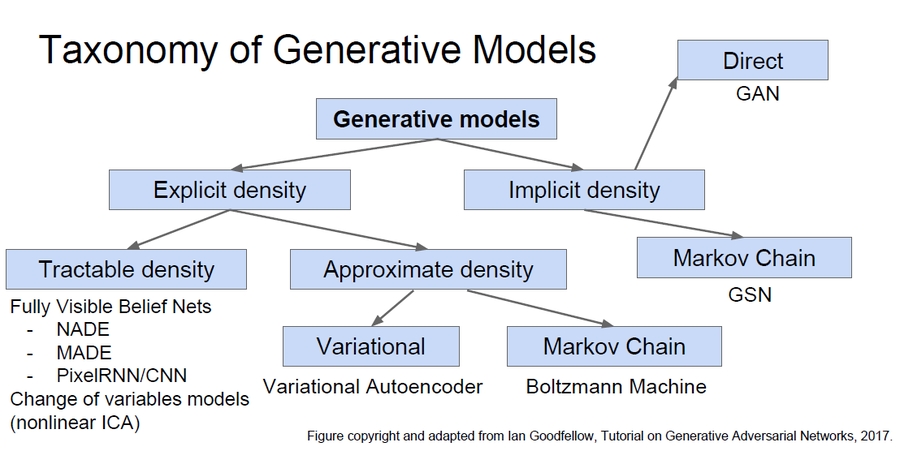

Taxonomy of Generative Models:

In this lecture we will discuss: PixelRNN/CNN, Variational Autoencoder, and GANs as they are the popular models in research now.

PixelRNN and PixelCNN

In a full visible belief network we use the chain rule to decompose likelihood of an image x into product of 1-d distributions

p(x) = sum(p(x[i]| x[1]x[2]....x[i-1]))Where p(x) is the Likelihood of image x and x[i] is Probability of i’th pixel value given all previous pixels.

To solve the problem we need to maximize the likelihood of training data but the distribution is so complex over pixel values.

Also we will need to define ordering of previous pixels.

PixelRNN

Founded by [van der Oord et al. 2016]

Dependency on previous pixels modeled using an RNN (LSTM)

Generate image pixels starting from corner

Drawback: sequential generation is slow! because you have to generate pixel by pixel!

PixelCNN

Also Founded by [van der Oord et al. 2016]

Still generate image pixels starting from corner.

Dependency on previous pixels now modeled using a CNN over context region

Training is faster than PixelRNN (can parallelize convolutions since context region values known from training images)

Generation must still proceed sequentially still slow.

There are some tricks to improve PixelRNN & PixelCNN.

PixelRNN and PixelCNN can generate good samples and are still active area of research.

Autoencoders

Unsupervised approach for learning a lower-dimensional feature representation from unlabeled training data.

Consists of Encoder and decoder.

The encoder:

Converts the input x to the features z. z should be smaller than x to get only the important values out of the input. We can call this dimensionality reduction.

The encoder can be made with:

Linear or non linear layers (earlier days days)

Deep fully connected NN (Then)

RELU CNN (Currently we use this on images)

The decoder:

We want the encoder to map the features we have produced to output something similar to x or the same x.

The decoder can be made with the same techniques we made the encoder and currently it uses a RELU CNN.

The encoder is a conv layer while the decoder is deconv layer! Means Decreasing and then increasing.

The loss function is L2 loss function:

L[i] = |y[i] - y'[i]|^2After training we though away the decoder.

# Now we have the features we need

We can use this encoder we have to make a supervised model.

The value of this it can learn a good feature representation to the input you have.

A lot of times we will have a small amount of data to solve problem. One way to tackle this is to use an Autoencoder that learns how to get features from images and train your small dataset on top of that model.

The question is can we generate data (Images) from this Autoencoder?

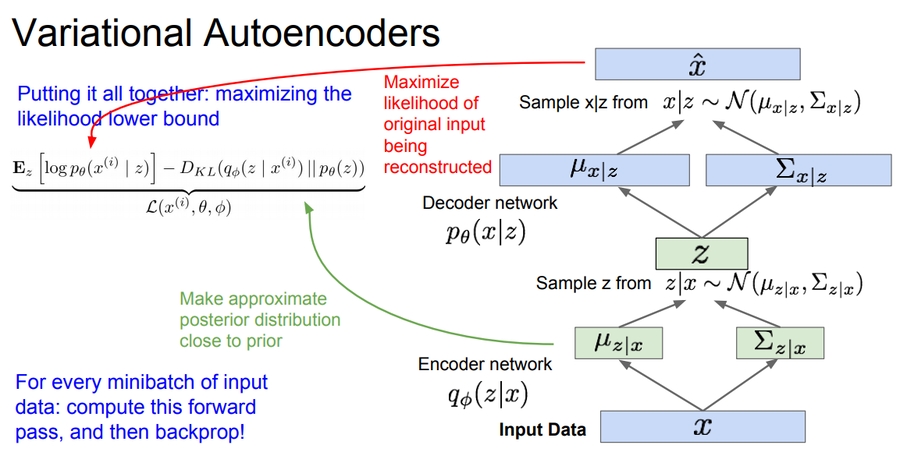

Variational Autoencoders (VAE)

Probabilistic spin on Autoencoders - will let us sample from the model to generate data!

We have z as the features vector that has been formed using the encoder.

We then choose prior p(z) to be simple, e.g. Gaussian.

Reasonable for hidden attributes: e.g. pose, how much smile.

Conditional p(x|z) is complex (generates image) => represent with neural network

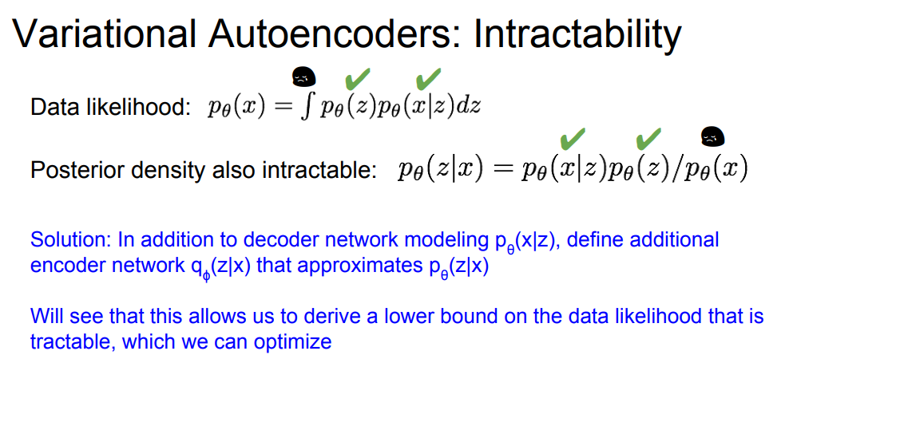

But we cant compute integral for P(z)p(x|z)dz as the following equation:

After resolving all the equations that solves the last equation we should get this:

Variational Autoencoder are an approach to generative models but Samples blurrier and lower quality compared to state-of-the-art (GANs)

Active areas of research:

More flexible approximations, e.g. richer approximate posterior instead of diagonal Gaussian

Incorporating structure in latent variables

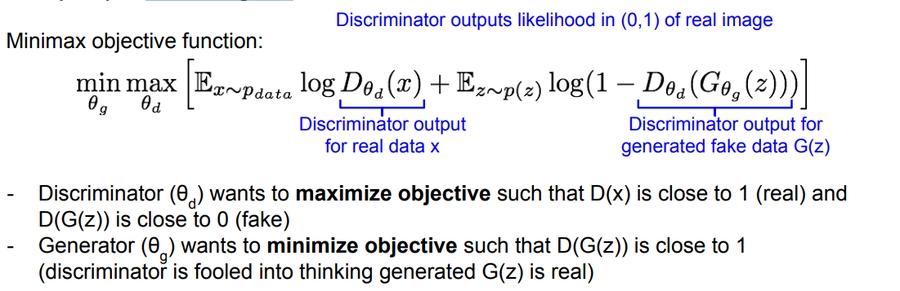

Generative Adversarial Networks (GANs)

GANs don’t work with any explicit density function!

Instead, take game-theoretic approach: learn to generate from training distribution through 2-player game.

Yann LeCun, who oversees AI research at Facebook, has called GANs:

The coolest idea in deep learning in the last 20 years

Problem: Want to sample from complex, high-dimensional training distribution. No direct way to do this as we have discussed!

Solution: Sample from a simple distribution, e.g. random noise. Learn transformation to training distribution.

So we create a noise image which are drawn from simple distribution feed it to NN we will call it a generator network that should learn to transform this into the distribution we want.

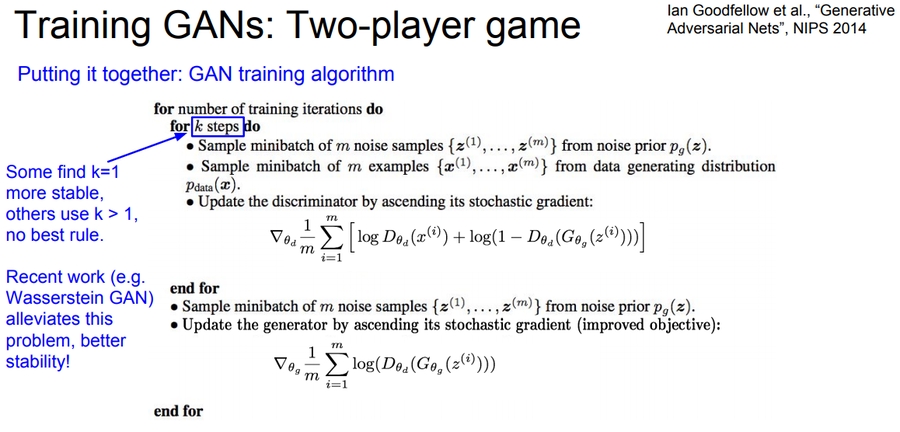

Training GANs: Two-player game:

Generator network: try to fool the discriminator by generating real-looking images.

Discriminator network: try to distinguish between real and fake images.

If we are able to train the Discriminator well then we can train the generator to generate the right images.

The loss function of GANs as minimax game are here:

The label of the generator network will be 0 and the real images are 1.

To train the network we will do:

Gradient ascent on discriminator.

Gradient ascent on generator but with different loss.

You can read the full algorithm with the equations here:

Aside: Jointly training two networks is challenging, can be unstable. Choosing objectives with better loss landscapes helps training is an active area of research.

Convolutional Architectures:

Generator is an upsampling network with fractionally-strided convolutions Discriminator is a Convolutional network.

Guidelines for stable deep Conv GANs:

Replace any pooling layers with strided convs (discriminator) and fractional-strided convs with (Generator).

Use batch norm for both networks.

Remove fully connected hidden layers for deeper architectures.

Use RELU activation in generator for all layers except the output which uses Tanh

Use leaky RELU in discriminator for all the layers.

2017 is the year of the GANs! it has exploded and there are some really good results.

Active areas of research also is GANs for all kinds of applications.

The GAN zoo can be found here: https://github.com/hindupuravinash/the-gan-zoo

Tips and tricks for using GANs: https://github.com/soumith/ganhacks

NIPS 2016 Tutorial GANs: https://www.youtube.com/watch?v=AJVyzd0rqdc

14. Deep reinforcement learning

This section contains a lot of math.

Reinforcement learning problems are involving an agent interacting with an environment, which provides numeric reward signals.

Steps are:

Environment --> State

s[t]--> Agent --> Actiona[t]--> Environment -->Reward r[t]+ Next states[t+1]--> Agent --> and so on..

Our goal is learn how to take actions in order to maximize reward.

An example is Robot Locomotion:

Objective: Make the robot move forward

State: Angle and position of the joints

Action: Torques applied on joints

1 at each time step upright + forward movement

Another example is Atari Games:

Deep learning has a good state of art in this problem.

Objective: Complete the game with the highest score.

State: Raw pixel inputs of the game state.

Action: Game controls e.g. Left, Right, Up, Down

Reward: Score increase/decrease at each time step

Go game is another example which AlphaGo team won in the last year (2016) was a big achievement for AI and deep learning because the problem was so hard.

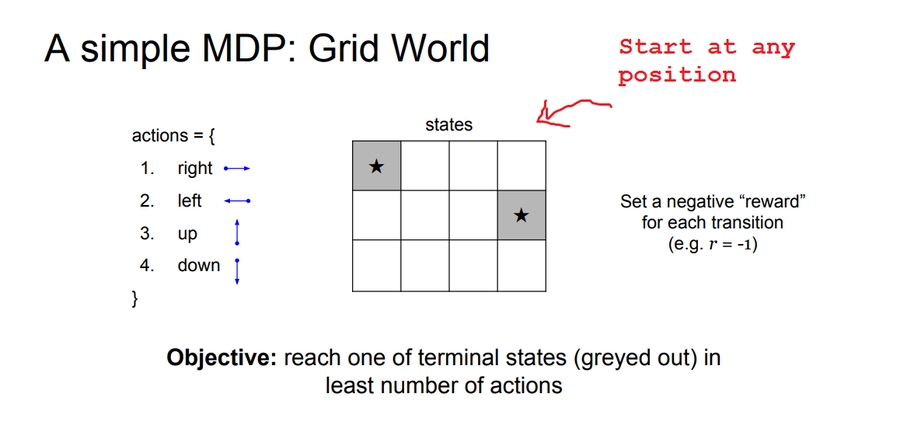

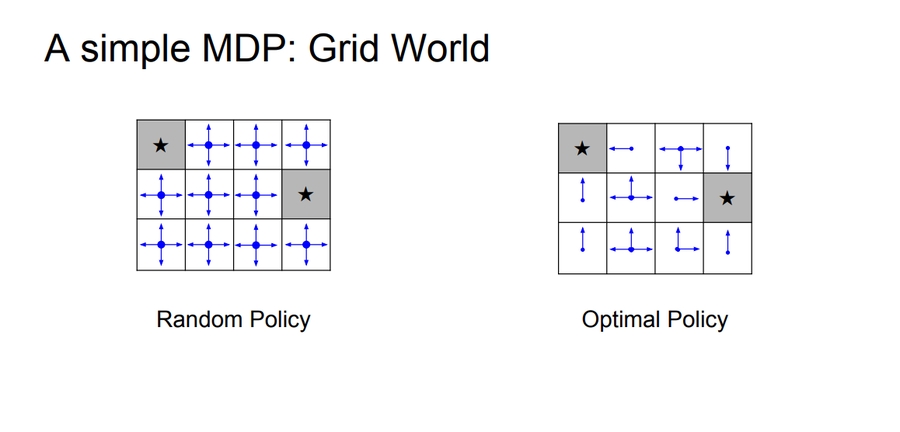

We can mathematically formulate the RL (reinforcement learning) by using Markov Decision Process

Markov Decision Process

Defined by (

S,A,R,P,Y) where:S: set of possible states.A: set of possible actionsR: distribution of reward given (state, action) pairP: transition probability i.e. distribution over next state given (state, action) pairY: discount factor# How much we value rewards coming up soon verses later on.

Algorithm:

At time step

t=0, environment samples initial states[0]Then, for t=0 until done:

Agent selects action

a[t]Environment samples reward from

Rwith (s[t],a[t])Environment samples next state from

Pwith (s[t],a[t])Agent receives reward

r[t]and next states[t+1]

A policy

piis a function from S to A that specifies what action to take in each state.Objective: find policy

pi*that maximizes cumulative discounted reward:Sum(Y^t * r[t], t>0)For example:

Solution would be:

The value function at state

s, is the expected cumulative reward from following the policy from states:V[pi](s) = Sum(Y^t * r[t], t>0) given s0 = s, pi

The Q-value function at state s and action

a, is the expected cumulative reward from taking actionain statesand then following the policy:Q[pi](s,a) = Sum(Y^t * r[t], t>0) given s0 = s,a0 = a, pi

The optimal Q-value function

Q*is the maximum expected cumulative reward achievable from a given (state, action) pair:Q*[s,a] = Max(for all of pi on (Sum(Y^t * r[t], t>0) given s0 = s,a0 = a, pi))

Bellman equation

Important thing is RL.

Given any state action pair (s,a) the value of this pair is going to be the reward that you are going to get r plus the value of the state that you end in.

Q*[s,a] = r + Y * max Q*(s',a') given s,a # Hint there is no policy in the equationThe optimal policy

pi*corresponds to taking the best action in any state as specified byQ*

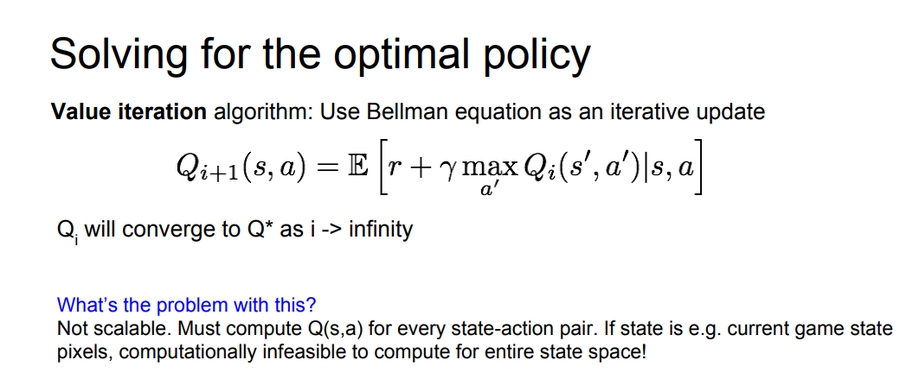

We can get the optimal policy using the value iteration algorithm that uses the Bellman equation as an iterative update

Due to the huge space dimensions in real world applications we will use a function approximator to estimate

Q(s,a). E.g. a neural network! this is called Q-learningAny time we have a complex function that we cannot represent we use Neural networks!

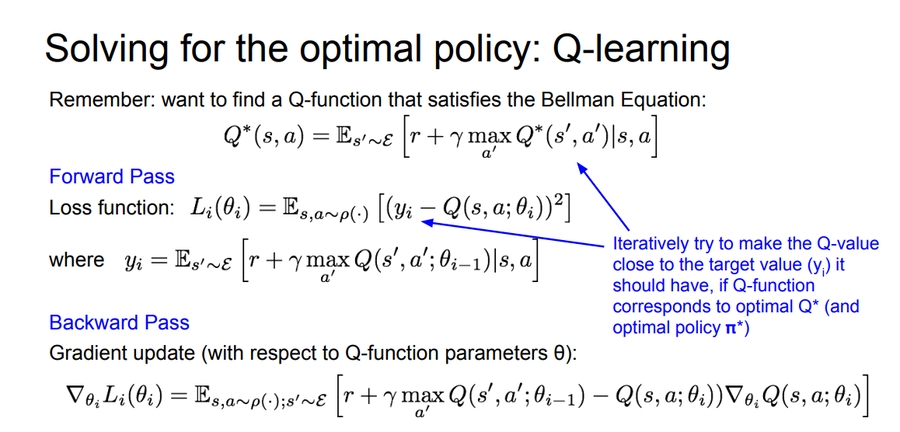

Q-learning

The first deep learning algorithm that solves the RL.

Use a function approximator to estimate the action-value function

If the function approximator is a deep neural network => deep q-learning

The loss function:

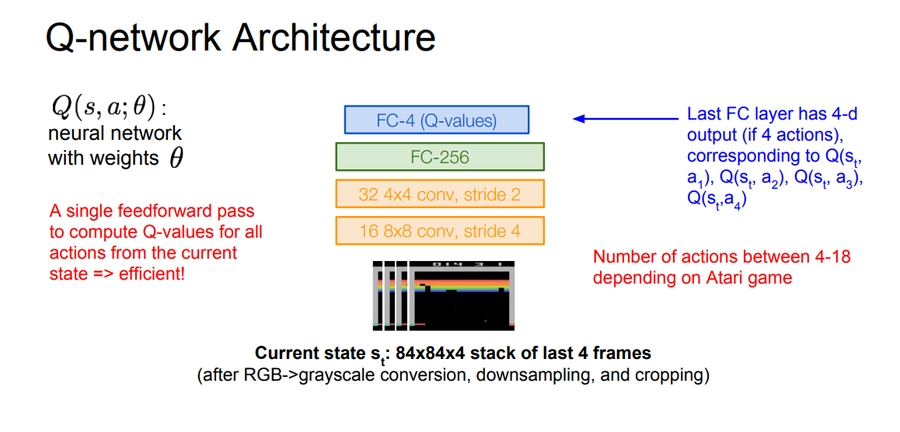

Now lets consider the "Playing Atari Games" problem:

Our total reward are usually the reward we are seeing in the top of the screen.

Q-network Architecture:

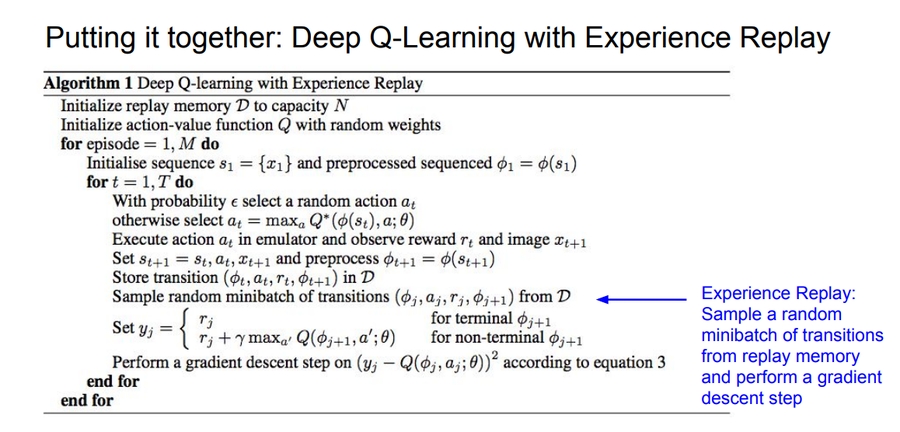

Learning from batches of consecutive samples is a problem. If we recorded a training data and set the NN to work with it, if the data aren't enough we will go to a high bias error. so we should use "experience replay" instead of consecutive samples where the NN will try the game again and again until it masters it.

Continually update a replay memory table of transitions (

s[t],a[t],r[t],s[t+1]) as game (experience) episodes are played.Train Q-network on random minibatches of transitions from the replay memory, instead of consecutive samples.

The full algorithm:

A video that demonstrate the algorithm on Atari game can be found here: "https://www.youtube.com/watch?v=V1eYniJ0Rnk"

Policy Gradients

The second deep learning algorithm that solves the RL.

The problem with Q-function is that the Q-function can be very complicated.

Example: a robot grasping an object has a very high-dimensional state.

But the policy can be much simpler: just close your hand.

Can we learn a policy directly, e.g. finding the best policy from a collection of policies?

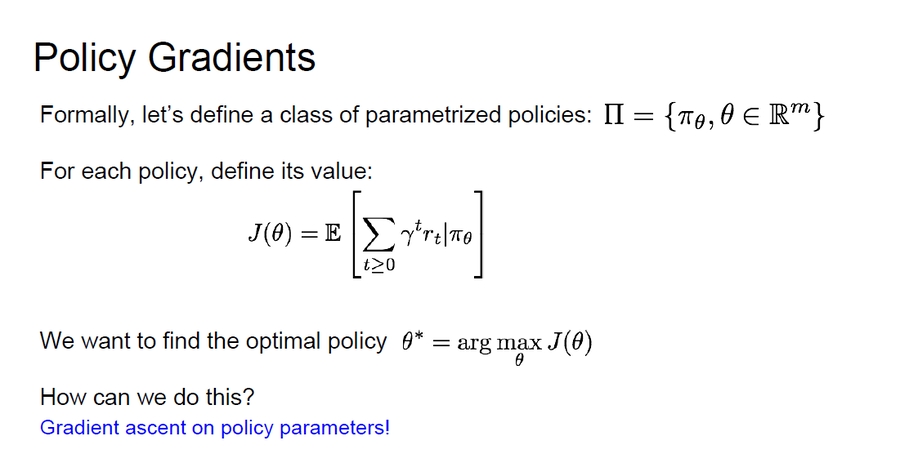

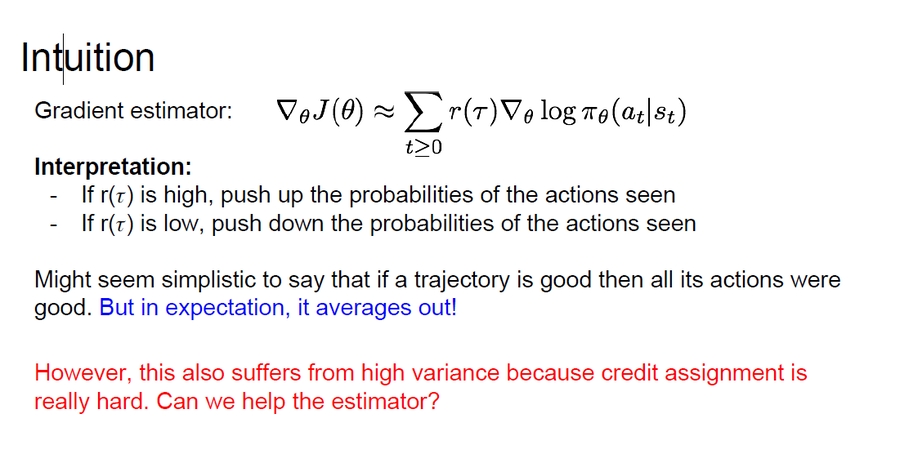

Policy Gradients equations:

Converges to a local minima of

J(ceta), often good enough!REINFORCE algorithm is the algorithm that will get/predict us the best policy

Equation and intuition of the Reinforce algorithm:

the problem was high variance with this equation can we solve this?

variance reduction is an active research area!

Recurrent Attention Model (RAM) is an algorithm that are based on REINFORCE algorithm and is used for image classification problems:

Take a sequence of “glimpses” selectively focusing on regions of the image, to predict class

Inspiration from human perception and eye movements.

Saves computational resources => scalability

If an image with high resolution you can save a lot of computations

Able to ignore clutter / irrelevant parts of image

RAM is used now in a lot of tasks: including fine-grained image recognition, image captioning, and visual question-answering

AlphaGo are using a mix of supervised learning and reinforcement learning, It also using policy gradients.

A good course from Standford on deep reinforcement learning

A good course on deep reinforcement learning (2017)

15. Efficient Methods and Hardware for Deep Learning

The original lecture was given by Song Han a PhD Candidate at standford.

Deep Conv nets, Recurrent nets, and deep reinforcement learning are shaping a lot of applications and changing a lot of our lives.

Like self driving cars, machine translations, alphaGo and so on.

But the trend now says that if we want a high accuracy we need a larger (Deeper) models.

The model size in ImageNet competation from 2012 to 2015 has increased 16x to achieve a high accurecy.

Deep speech 2 has 10x training operations than deep speech 1 and thats in only one year!

# At Baidu

There are three challenges we got from this

Model Size

Its hard to deploy larger models on our PCs, mobiles, or cars.

Speed

ResNet152 took 1.5 weeks to train and give the 6.16% accurecy!

Long training time limits ML researcher’s productivity

Energy Efficiency

AlphaGo: 1920 CPUs and 280 GPUs. $3000 electric bill per game

If we use this on our mobile it will drain the battery.

Google mentioned in thier blog if all the users used google speech for 3 minutes, they have to double thier data-center!

Where is the Energy Consumed?

larger model => more memory reference => more energy

We can improve the Efficiency of Deep Learning by Algorithm-Hardware Co-Design.

From both the hardware and the algorithm perspectives.

Hardware 101: the Family

General Purpose

# Used for any hardwareCPU

# Latency oriented, Single strong threaded like a single elepahntGPU

# Throughput oriented, So many small threads like a lot of ants

GPGPU

Specialized HW

#Tuned for a domain of applicationsFPGA# Programmable logic, Its cheaper but less effiecnet`

ASIC

# Fixed logic, Designed for a certian applications (Can be designed for deep learning applications)